Key Takeaways

- Data-Driven Decisions: A/B testing empowers you to make informed, data-driven decisions by experimenting with changes and measuring their impact on conversions.

- Continuous Improvement: A/B testing isn’t a one-time effort; it’s an ongoing process. Consistently optimize your digital experiences to stay ahead in the competitive online landscape.

- Personalization Matters: Advanced strategies like personalization and segmentation can significantly enhance your conversion rates by tailoring content and experiences to individual user preferences.

In the dynamic and ever-evolving realm of digital marketing, the quest for higher conversion rates is nothing short of a perpetual adventure.

Imagine having the power to tweak the colour of a button, rephrase a headline, or adjust the placement of an image on your website, all with the potential to significantly boost your conversion rates.

Sounds too good to be true, right?

Well, not quite.

Welcome to the fascinating world of A/B testing, a potent tool that empowers marketers and website owners to unlock the secrets of user behaviour, optimize their online experiences, and ultimately drive better conversions.

Whether you’re an e-commerce enthusiast aiming to increase your sales, a content creator looking to boost newsletter sign-ups, or a digital marketer seeking to enhance campaign performance, A/B testing can show you which changes actually move those numbers.

But what exactly is A/B testing, and why has it become an indispensable component of modern digital marketing strategies?

How can you harness its potential to propel your business to new heights?

What are the best practices and advanced strategies that can transform you into an A/B testing maestro?

You’ve come to the right place.

In this comprehensive exploration, we will embark on a journey to unravel the basics of A/B testing, equipping you with the knowledge, tools, and strategies needed to supercharge your conversion rates.

Together, we’ll delve deep into the intricacies of this data-driven technique, demystifying its core concepts and unveiling the myriad ways it can benefit your online endeavours.

From understanding the fundamental principles of A/B testing to deciphering the nuances of hypothesis creation, test design, and result interpretation, we’ll leave no stone unturned.

You’ll gain insights into the art of optimizing your website or marketing campaigns, honing your digital presence, and achieving that elusive goal of converting more visitors into loyal customers.

But we won’t stop there.

We’ll also venture into the realm of advanced A/B testing strategies, exploring topics like personalization, segmentation, and mobile optimization.

These advanced techniques will enable you to take your A/B testing efforts to the next level, ensuring that your digital experiences are not only conversion-focused but also tailored to the unique needs and preferences of your audience.

Moreover, in an age where ethical considerations and user privacy are paramount, we’ll also touch on why A/B tests should be conducted responsibly, respecting user privacy and complying with relevant regulations.

So, whether you’re a seasoned marketer looking to fine-tune your strategies or a novice seeking to understand the basics, this journey into the world of A/B testing promises to be both enlightening and empowering.

By the time you reach the end of this blog, you’ll possess the knowledge and tools needed to embark on your own A/B testing adventures, armed with the confidence that you can unravel the mysteries of user behaviour and drive better conversions.

So, fasten your seatbelt, prepare to embark on an illuminating journey, and let’s dive deep into the world of A/B testing to uncover the secrets of better conversions.

Before we venture further, we want to share who we are and what we do.

About AppLabx

From developing a solid marketing plan to creating compelling content, optimizing for search engines, leveraging social media, and utilizing paid advertising, AppLabx offers a comprehensive suite of digital marketing services designed to drive growth and profitability for your business.

AppLabx is well known for helping companies and startups use SEO and Digital Marketing to drive web traffic to their websites and web apps.

At AppLabx, we understand that no two businesses are alike. That’s why we take a personalized approach to every project, working closely with our clients to understand their unique needs and goals, and developing customized strategies to help them achieve success.

If you need a digital consultation, then send in an inquiry here.

A/B Testing: Unraveling the Basics of Split Testing for Better Conversions

- Getting Started with A/B Testing

- The A/B Testing Process

- Best Practices for Effective A/B Testing

- Interpreting A/B Testing Results

- Advanced A/B Testing Strategies

1. Getting Started with A/B Testing

Setting Clear Objectives

Before diving headfirst into A/B testing, it’s crucial to define clear objectives for your experiments.

What do you aim to achieve with A/B testing?

Is it higher click-through rates, increased e-commerce sales, or improved user engagement?

Setting specific, measurable, achievable, relevant, and time-bound (SMART) objectives is key to successful A/B testing.

Example:

Let’s say you run an e-commerce website, and your objective is to boost the conversion rate of your product page. Your SMART goal could be: “Increase the conversion rate of the product page by 10% within three months.”

Identifying Key Performance Indicators (KPIs)

A/B testing revolves around data and metrics.

Identifying the right Key Performance Indicators (KPIs) allows you to measure the impact of your experiments accurately.

Your KPIs should align with your objectives and help you track the changes you make during testing.

Example:

For the e-commerce website, relevant KPIs might include conversion rate, average order value, bounce rate, and click-through rate on the product page.
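To make these KPIs concrete, here is a minimal Python sketch that computes them for one variation from a list of visitor sessions. The `Session` record and its fields are hypothetical; in practice your analytics or testing tool collects this data, and its definitions may differ (for example, this sketch counts a bounce as a single-pageview session, which is only one of several definitions in use).

```python
from dataclasses import dataclass


@dataclass
class Session:
    """One visit to the product page (hypothetical record shape)."""
    pageviews: int            # pages viewed in this session
    clicked_cta: bool         # did the visitor click the main call-to-action?
    ordered: bool             # did the session end in a purchase?
    order_value: float = 0.0  # revenue from the order, 0 if none


def compute_kpis(sessions: list[Session]) -> dict[str, float]:
    """Compute the example KPIs for one variation's sessions."""
    total = len(sessions)
    if total == 0:
        raise ValueError("no sessions to analyse")
    orders = [s for s in sessions if s.ordered]
    revenue = sum(s.order_value for s in orders)
    return {
        # share of sessions that ended in a purchase
        "conversion_rate": len(orders) / total,
        # revenue per order (0 when there are no orders yet)
        "average_order_value": revenue / len(orders) if orders else 0.0,
        # share of sessions that viewed only a single page (assumed definition)
        "bounce_rate": sum(1 for s in sessions if s.pageviews == 1) / total,
        # share of sessions that clicked the call-to-action
        "click_through_rate": sum(1 for s in sessions if s.clicked_cta) / total,
    }
```

Running `compute_kpis` separately on each variation’s sessions gives you side-by-side numbers to compare.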

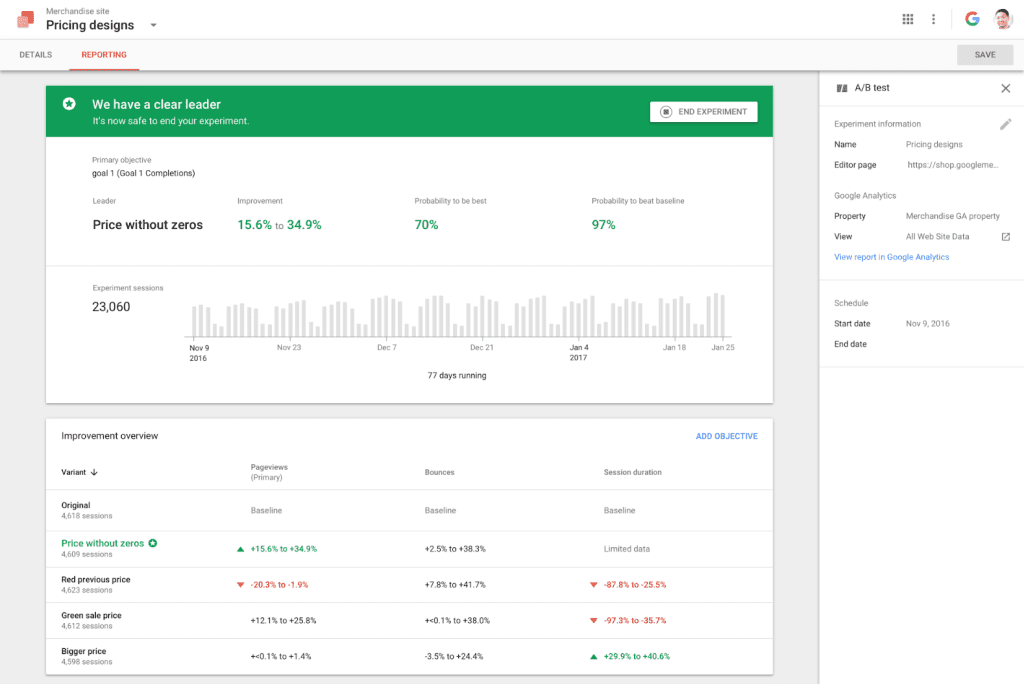

Tools and Software Selection

Selecting the right tools and software for A/B testing is crucial.

These tools facilitate test creation, data collection, and result analysis.

There are several powerful A/B testing platforms available, including Optimizely and VWO (Visual Website Optimizer). Google Optimize was another popular option, but Google discontinued it in September 2023.

Example:

If you’re just starting out and have a limited budget, look for a platform with a free plan or trial to begin your A/B testing journey. As your needs grow, you may consider dedicated tools like Optimizely or VWO for more advanced features and capabilities.

Randomization and Sample Size

Randomization is a core principle of A/B testing.

Randomly assigning each visitor to a variation reduces bias: the groups end up comparable, so differences in results reflect the change you made rather than who happened to see each version.
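One common way to randomize in practice is to hash a stable visitor identifier, so each visitor gets an effectively random but consistent assignment. The sketch below assumes you have such an ID (for example, a first-party cookie value); `assign_variant` and the experiment name are hypothetical, and most testing platforms handle this step for you.

```python
import hashlib


def assign_variant(user_id: str, experiment: str, split: float = 0.5) -> str:
    """Deterministically assign a user to 'A' or 'B'.

    Hashing experiment + user ID gives a stable, effectively random bucket:
    the same visitor always sees the same variation, and different
    experiments bucket users independently.
    """
    key = f"{experiment}:{user_id}".encode("utf-8")
    # First 8 bytes of the SHA-256 digest as an integer, mapped to [0, 1)
    bucket = int.from_bytes(hashlib.sha256(key).digest()[:8], "big") / 2**64
    return "A" if bucket < split else "B"
```

Because the bucket depends on the experiment name as well as the user ID, a visitor placed in B for one test is not automatically placed in B for every other test.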

Additionally, determining the appropriate sample size before you start is crucial: it gives the test enough statistical power to detect a real difference if one exists.

Example:

If your e-commerce website has 10,000 monthly visitors and you want to test a new product page design, you might choose to randomly show the new design to 5,000 visitors and the existing design to the other 5,000.
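Whether 5,000 visitors per variation is enough depends on your baseline conversion rate and the smallest improvement you care about. The sketch below uses the standard normal-approximation formula for comparing two proportions; `sample_size_per_variant` is a hypothetical helper, the 3% baseline and 10% relative lift in the usage note are hypothetical figures, and the 5% significance level and 80% power are common defaults rather than rules.

```python
import math
from statistics import NormalDist


def sample_size_per_variant(baseline: float, relative_lift: float,
                            alpha: float = 0.05, power: float = 0.8) -> int:
    """Visitors needed in EACH variation for a two-sided two-proportion test.

    baseline: current conversion rate (e.g. 0.03 for 3%)
    relative_lift: smallest relative improvement worth detecting (0.10 = +10%)
    """
    p1 = baseline
    p2 = baseline * (1 + relative_lift)
    p_bar = (p1 + p2) / 2
    z_alpha = NormalDist().inv_cdf(1 - alpha / 2)  # ~1.96 for alpha = 0.05
    z_beta = NormalDist().inv_cdf(power)           # ~0.84 for 80% power
    numerator = (z_alpha * math.sqrt(2 * p_bar * (1 - p_bar))
                 + z_beta * math.sqrt(p1 * (1 - p1) + p2 * (1 - p2))) ** 2
    return math.ceil(numerator / (p2 - p1) ** 2)
```

For a 3% baseline and a 10% relative lift (3.0% to 3.3%), `sample_size_per_variant(0.03, 0.10)` comes out at roughly 53,000 visitors per variation, so a site with 10,000 monthly visitors could only detect a much larger effect within a single month.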

Test Duration

A/B tests should run for an appropriate duration to gather sufficient data and account for variations over time.

Short tests may not yield statistically significant results, while overly long tests may delay valuable improvements.

Example:

If you’re testing a change that you expect to have a large impact on user behaviour, you may collect enough data in as little as a few days.

However, for smaller changes, it’s advisable to run tests for at least two to four weeks to capture different user segments and behaviours.
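Sample size and traffic together give a rough test duration. This sketch divides the total sample needed by the daily visitors entering the test, applies a minimum length (the 14-day default mirrors the two-week lower bound suggested above and is an assumption you can change), and rounds up to whole weeks so weekday and weekend behaviour are both represented. The `estimate_test_days` helper is hypothetical.

```python
import math


def estimate_test_days(per_variant: int, variants: int, daily_visitors: int,
                       min_days: int = 14) -> int:
    """Rough test length in days.

    Enough days to reach the required sample, never shorter than min_days,
    rounded up to whole weeks so every weekday is covered equally often.
    """
    days = math.ceil(per_variant * variants / daily_visitors)
    days = max(days, min_days)
    return math.ceil(days / 7) * 7
```

For example, `estimate_test_days(5000, 2, 1000)` returns 14: the sample is reached in 10 days, but the two-week minimum applies.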

Monitoring and Measuring

Throughout the testing period, it’s crucial to actively monitor the performance of your A/B tests.

Use analytics tools to track the chosen KPIs and measure the impact of the changes on user behaviour.

Example:

If your A/B test involves changing the colour of a call-to-action button on your website, you can monitor the click-through rate for both variations to see which colour performs better.

In the next section, we’ll delve deeper into the A/B testing process, exploring how to create hypotheses and design effective tests that align with your objectives and KPIs.

2. The A/B Testing Process

A/B testing is a structured process that involves careful planning, execution, and analysis to achieve meaningful insights and improve conversions.

Let’s break down the A/B testing process into its key components:

Hypothesis Creation and Test Design

Defining the Problem

Before creating an A/B test, you must identify a problem or opportunity for improvement.

What aspect of your website or marketing campaign needs optimization?

For instance, are you concerned about your email open rates being too low?

Formulating the Hypothesis

A hypothesis is the cornerstone of A/B testing. It’s a statement that defines the change you want to test and the expected outcome. It should be specific, testable, and based on data-driven insights.

Example:

Problem: Low email open rates for your newsletter.

Hypothesis: Changing the email subject line to include a compelling benefit statement will increase open rates by 10%-14%.

Implementation and Data Collection

Creating Variations (A and B)

For your A/B test, you’ll create two versions of the element you want to test (e.g., an email subject line, a webpage, or a product page).

Variation A is your control group, representing the current version, while Variation B is the experimental group, featuring the change you want to test.

Example:

In the email subject line A/B test, Variation A uses the current subject line, while Variation B has the new subject line with the benefit statement.

Splitting Traffic

Randomly splitting your website traffic or email recipients between Variations A and B is crucial to ensure unbiased results. Randomization spreads external factors evenly across both groups, so they are unlikely to skew the comparison.

Example:

If you have 10,000 email subscribers, send the original subject line to 5,000 subscribers (Variation A) and the new subject line to the other 5,000 (Variation B).
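For an email list, where you know every recipient up front, a shuffled split is a simple way to create the two groups. A minimal sketch, with a hypothetical `split_list` helper and an arbitrary fixed seed so the split can be reproduced later:

```python
import random


def split_list(subscribers: list[str], seed: int = 2024) -> tuple[list[str], list[str]]:
    """Randomly split a subscriber list into two groups (A, B).

    With an odd count, group B gets the extra subscriber. A fixed seed
    makes the split reproducible for later auditing.
    """
    shuffled = subscribers[:]              # copy so the original order is untouched
    random.Random(seed).shuffle(shuffled)  # separate RNG, leaves global state alone
    half = len(shuffled) // 2
    return shuffled[:half], shuffled[half:]
```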

Drawing Conclusions and Identifying Winners

Statistical Significance

To determine if your test results are significant, you’ll need to analyze the data collected.

Statistical significance helps you ascertain if the observed differences between Variation A and Variation B are likely to be genuine and not due to chance.

Example:

If Variation B’s open rate is 20% higher than Variation A’s and this difference is statistically significant with a p-value of 0.05 or lower, you can conclude that the new subject line is more effective.
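A common way to get that p-value for open or conversion rates is a two-proportion z-test. The sketch below implements it with Python’s standard library; it relies on a normal approximation, which is reasonable for samples in the thousands, and the counts in the usage note are hypothetical.

```python
import math
from statistics import NormalDist


def two_proportion_z_test(success_a: int, n_a: int,
                          success_b: int, n_b: int) -> tuple[float, float]:
    """Two-sided z-test for a difference between two proportions.

    Returns (z, p_value); a positive z means B's rate is higher than A's.
    """
    p_a = success_a / n_a
    p_b = success_b / n_b
    # Pooled rate under the null hypothesis that A and B perform the same
    p_pool = (success_a + success_b) / (n_a + n_b)
    se = math.sqrt(p_pool * (1 - p_pool) * (1 / n_a + 1 / n_b))
    if se == 0:
        # Both groups all-success or all-failure: no evidence of a difference
        return 0.0, 1.0
    z = (p_b - p_a) / se
    p_value = 2 * (1 - NormalDist().cdf(abs(z)))
    return z, p_value
```

With 1,000 opens out of 5,000 for A (20%) and 1,200 out of 5,000 for B (24%, i.e. 20% higher), `two_proportion_z_test(1000, 5000, 1200, 5000)` gives a z of about 4.8 and a p-value far below 0.05.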

Identifying the Winning Variation

Once you’ve established statistical significance and collected enough data, you can identify the winning variation—the one that performed better.

This winning variation becomes the new control, and you can implement the change across your website or campaign.

Example:

In the email subject line A/B test, if Variation B (the new subject line) had a statistically significant higher open rate, it becomes the new control, and you use it for future emails.

By following this structured A/B testing process, you can make data-backed decisions that lead to improvements in your digital marketing efforts.

In the next section, we’ll delve into best practices for effective A/B testing, helping you avoid common pitfalls and optimize your testing approach for maximum impact.

3. Best Practices for Effective A/B Testing

When it comes to A/B testing, following best practices can make the difference between success and a less impactful experiment.

Here are some key guidelines to ensure your A/B testing efforts are effective:

Avoiding Common Pitfalls

Avoiding Sample Pollution

Sample pollution occurs when external factors, like website outages or special promotions, impact your test results.

Keep a vigilant eye on your test environment to minimize the risk of skewed data.

Example:

If your website experiences unexpected downtime during an A/B test, the data for that period might be unreliable, affecting your test’s accuracy.

Testing One Variable at a Time

To pinpoint the exact cause of any changes in user behaviour, limit your A/B tests to one variable at a time. Testing multiple changes simultaneously can lead to confounding results.

Example:

If you’re testing a new webpage layout and changing the product description simultaneously, you won’t know which change caused the observed differences in conversion rates.

Continuous Optimization

Iterative Testing

A/B testing isn’t a one-time affair. Continuously optimize by conducting follow-up tests based on the learnings from previous experiments.

Small, iterative improvements often lead to substantial gains over time.

Example:

After improving the email subject line, you might test different email send times to further enhance open rates, gradually increasing engagement.

Segmentation

Segment your audience based on relevant criteria like demographics, location, or behaviour.

Tailor your A/B tests to specific segments to deliver more personalized experiences.

Example:

Segmenting your audience might reveal that a particular change resonates better with users in specific geographic regions, allowing for more targeted optimizations.

Multivariate Testing

Multivariate testing involves testing multiple variations of different elements on a single page simultaneously.

This approach is ideal when you want to understand how various changes interact with each other.

Example:

If you’re optimizing a product page, you might simultaneously test the product image, price, and product description to determine the ideal combination for maximum conversions.
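A full-factorial multivariate test shows every combination of every option, so the number of variations multiplies quickly and each one receives a smaller share of traffic. The sketch below, with hypothetical options for the three elements mentioned above, enumerates the combinations and shows how 10,000 monthly visitors would be divided among them.

```python
from itertools import product

# Hypothetical options for each product-page element
elements = {
    "image": ["lifestyle photo", "studio photo"],
    "price": ["$49", "$45"],
    "description": ["short", "detailed"],
}

# Full-factorial design: every combination of every option
combinations = [dict(zip(elements, combo)) for combo in product(*elements.values())]

monthly_visitors = 10_000
# prints: 8 combinations, ~1250 visitors each per month
print(f"{len(combinations)} combinations, "
      f"~{monthly_visitors // len(combinations)} visitors each per month")
for combo in combinations:
    print(combo)
```

Adding a third option to any one element would raise the count to 12 combinations, which is why multivariate tests generally need far more traffic than a simple A/B test.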

By adhering to these best practices, you’ll not only increase the reliability of your A/B tests but also maximize their impact on your conversion rates.

In the next section, we’ll explore how to interpret A/B testing results, ensuring that you can make data-informed decisions to drive better conversions.

4. Interpreting A/B Testing Results

After running your A/B test, the next critical step is interpreting the results to make informed decisions about implementing changes.

Here’s how you can effectively analyze and interpret A/B testing results:

Understanding Statistical Significance

Statistical significance is a fundamental concept in A/B testing.

It tells you whether the observed differences between Variation A and Variation B are likely due to chance or if they are statistically meaningful.

Typically, a p-value of 0.05 or lower indicates statistical significance.

Example:

Suppose your A/B test on a new website design shows that Variation B has a 10% higher click-through rate compared to Variation A, with a p-value of 0.03.

Because 0.03 is below the 0.05 threshold, the result is statistically significant: a difference this large would be unlikely to appear by chance if the new design had no real effect.

Learning from Failed Tests

Not all A/B tests yield positive results, and that’s perfectly normal.

Failed tests can provide valuable insights into what doesn’t work, guiding you away from ineffective strategies.

Example:

If you tested a new headline for a blog post and found that it resulted in a lower click-through rate than the original, you’ve learned that this particular headline style doesn’t resonate with your audience.

Scaling Successful Changes

When you identify a winning variation, it’s time to scale the successful change across your website or marketing campaigns.

However, proceed with caution and ensure that the results are consistent and sustainable.

Example:

After implementing a change in your email subject lines that resulted in a 20% increase in open rates, track this improvement over several campaigns to confirm that it’s a consistent and sustainable gain.

The Role of Confidence Intervals

Confidence intervals help you understand the range of values within which the true effect of your change is likely to fall.

It’s essential to consider confidence intervals alongside p-values to get a complete picture of your results.

Example:

If your A/B test reveals a 10% increase in conversion rates with a confidence interval of ±2%, you can be reasonably confident that the actual increase falls between 8% and 12%.
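Here is a minimal sketch of a confidence interval for the difference in conversion rate between B and A, using the simple Wald (normal-approximation) method. It reports an absolute difference in percentage points rather than a relative lift; `diff_confidence_interval` is a hypothetical helper and the counts in the usage note are hypothetical.

```python
import math
from statistics import NormalDist


def diff_confidence_interval(conv_a: int, n_a: int, conv_b: int, n_b: int,
                             confidence: float = 0.95) -> tuple[float, float]:
    """Wald confidence interval for the absolute difference in conversion
    rate (B minus A), using the normal approximation."""
    p_a, p_b = conv_a / n_a, conv_b / n_b
    # Unpooled standard error of the difference
    se = math.sqrt(p_a * (1 - p_a) / n_a + p_b * (1 - p_b) / n_b)
    z = NormalDist().inv_cdf(1 - (1 - confidence) / 2)  # ~1.96 for 95%
    diff = p_b - p_a
    return diff - z * se, diff + z * se
```

With 500 conversions out of 10,000 for A and 580 out of 10,000 for B, the interval is roughly 0.2 to 1.4 percentage points: the whole range is above zero, but the true lift could be much smaller than the observed 0.8 points.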

Segmentation Analysis

Sometimes, A/B testing results can vary among different segments of your audience. Analyze results by segment to gain insights into how different groups respond to changes.

Example:

If you conducted an A/B test on your e-commerce website’s checkout process and found that mobile users responded more positively to the change than desktop users, consider implementing the change for mobile users while maintaining the existing version for desktop users.
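Breaking results down by segment is a matter of grouping visitors by segment and variation before computing rates. A minimal sketch, assuming each row records a visitor’s segment, assigned variation, and whether they converted (the row format and `rates_by_segment` helper are hypothetical). Keep in mind that each segment has a smaller sample than the full test, and checking many segments increases the chance of a false positive, so a segment-level difference is worth confirming with a follow-up test.

```python
from collections import defaultdict


def rates_by_segment(rows: list[tuple[str, str, bool]]) -> dict[str, dict[str, float]]:
    """rows: (segment, variant, converted) per visitor.

    Returns {segment: {variant: conversion_rate}}.
    """
    # counts[segment][variant] = [conversions, visitors]
    counts = defaultdict(lambda: defaultdict(lambda: [0, 0]))
    for segment, variant, converted in rows:
        cell = counts[segment][variant]
        cell[0] += int(converted)
        cell[1] += 1
    return {seg: {var: conv / total for var, (conv, total) in variants.items()}
            for seg, variants in counts.items()}
```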

Continuous Monitoring and Validation

Even after implementing a successful change, it’s crucial to continuously monitor and validate the results over time. User behaviour and preferences can evolve, so ongoing testing and optimization are essential for sustained improvements.

Example:

After improving your website’s navigation menu and observing an initial increase in user engagement, continue to monitor these metrics to ensure that the positive impact persists.

By mastering the art of interpreting A/B testing results, you can confidently make data-driven decisions that drive better conversions.

In the next section, we’ll explore advanced A/B testing strategies, including personalization, cross-device testing, and mobile optimization, to further enhance your conversion optimization efforts.

5. Advanced A/B Testing Strategies

While the fundamentals of A/B testing are essential for optimizing conversions, advanced strategies can take your efforts to the next level.

Let’s explore some sophisticated techniques to enhance your A/B testing initiatives:

Personalization and Segmentation

Dynamic Content Personalization

Personalization involves tailoring content and experiences to individual users based on their preferences and behaviour. A/B testing can be used to refine personalized content delivery for maximum impact.

Example:

If you have an e-commerce website, you can A/B test product recommendations personalized to each user’s browsing and purchase history against generic recommendations to see whether personalization leads to higher conversion rates.

Segmentation-Based Testing

Segmentation divides your audience into distinct groups based on characteristics such as location, age, or past behaviour.

A/B testing within these segments allows you to optimize experiences for different audience segments.

Example:

If you’re an online retailer, you might find that offering location-specific promotions through segmentation significantly improves click-through and conversion rates in certain regions.

Cross-Device and Cross-Browser Testing

Cross-Device Compatibility

In an era where users access websites and apps from various devices, it’s crucial to ensure your content and design are consistent and optimized across devices. A/B testing can help identify and rectify issues related to cross-device compatibility.

Example:

A cross-device A/B test may reveal that a responsive design with an optimized mobile user experience results in a 15% increase in conversion rates for mobile users.

Cross-Browser Compatibility

Different web browsers can render websites differently. A/B testing for cross-browser compatibility ensures a consistent user experience, irrespective of the browser users prefer.

Example:

A cross-browser test might reveal that a particular JavaScript feature performs poorly on Internet Explorer, prompting you to optimize it for that browser and improve overall conversion rates.

Mobile A/B Testing

Mobile Optimization

As mobile usage continues to surge, mobile optimization is paramount. A/B testing specific to mobile devices can help you fine-tune mobile experiences for better conversions.

Example:

A/B testing mobile-specific features, like simplified checkout processes or mobile-first designs, can lead to significant improvements in conversion rates for your mobile audience.

App A/B Testing

If you have a mobile app, A/B testing can be applied to optimize in-app experiences.

Test variations of app interfaces, onboarding flows, or push notifications to increase user engagement and conversions.

By integrating these advanced A/B testing strategies into your conversion optimization toolkit, you can tailor experiences to individual users, ensure cross-device and cross-browser compatibility, and optimize for the mobile landscape.

Conclusion

In the ever-evolving landscape of digital marketing, the pursuit of better conversions is a constant and unrelenting journey.

A/B testing, the time-tested and data-driven strategy we’ve explored in depth throughout this comprehensive guide, is your compass in this dynamic landscape.

As we bring our journey to a close, let’s recap the invaluable insights and strategies you’ve gathered to propel your conversion optimization efforts to new heights.

A/B testing, also known as split testing, stands as a testament to the power of data-driven decision-making.

It empowers marketers, website owners, and digital strategists with the ability to explore, experiment, and optimize their digital experiences like never before. From tweaking headlines to refining product pages, the possibilities are limitless.

The core purpose of A/B testing is to enhance conversions, a metric that holds unparalleled importance in the world of online success.

Whether you aim to increase sales, boost email sign-ups, or improve user engagement, A/B testing is the road that leads you to your conversion destination.

Our journey began with the essentials. Setting clear objectives, identifying key performance indicators (KPIs), and selecting the right tools and software are the foundational steps of A/B testing.

With these pillars in place, you’re equipped to embark on your testing adventures with a clear sense of direction.

We delved into the structured process of A/B testing, guiding you through hypothesis creation, test design, implementation, data collection, and drawing conclusions. With these steps mastered, you can confidently conduct A/B tests that yield meaningful results and insights.

Avoiding common pitfalls, testing one variable at a time, and optimizing continuously are the guiding principles of effective A/B testing. These best practices ensure that your testing efforts are both reliable and impactful.

Understanding statistical significance, learning from failed tests, and scaling successful changes are the keys to unlocking the true potential of A/B testing. With these skills, you can confidently navigate the intricate world of data analysis and make informed decisions.

Elevating your A/B testing game, we explored advanced strategies such as personalization, segmentation, cross-device and cross-browser testing, and mobile optimization.

These techniques allow you to tailor experiences, ensure compatibility across platforms, and maximize conversions.

A/B testing is not a destination but a journey. It’s a continuous process of refinement and improvement. Each test you conduct, each result you analyze, and each change you implement contributes to the ongoing evolution of your digital presence.

Ethical considerations and user privacy matter in A/B testing as well.

Respecting user privacy and adhering to ethical guidelines and regulatory requirements are non-negotiable principles that must underpin your testing endeavours.

As you conclude this exploration into A/B testing, armed with knowledge, tools, and strategies, you’re now prepared to embark on your own adventures in conversion optimization.

The road ahead may be filled with challenges and surprises, but it’s also brimming with opportunities for growth and success.

In the ever-evolving world of digital marketing, one thing remains constant: the pursuit of better conversions.

With A/B testing as your steadfast companion, you’re poised to unravel the mysteries of user behaviour, refine your digital experiences, and ultimately drive better conversions.

So, take the first step, conduct that initial test, and let the data guide you toward your conversion optimization goals.

Remember, this journey is not just about achieving better conversions; it’s about creating better digital experiences for your audience.

It’s about understanding their needs, meeting their expectations, and building lasting relationships. So, keep testing, keep optimizing, and keep delivering exceptional digital experiences that resonate with your audience.

As we part ways on this journey, we leave you with one final piece of advice: Embrace the power of A/B testing, and let your data be your guide.

The path to better conversions is yours to discover, and with A/B testing as your compass, success is within your reach.

Safe travels, and may your conversion optimization adventures be both rewarding and fulfilling.

If you are looking for a top-class digital marketer, then book a free consultation slot here.

If you find this article useful, why not share it with your friends and business partners, and also leave a nice comment below?

We at the AppLabx Research Team strive to bring the latest and most meaningful data, guides, and statistics to your doorstep.

To get access to top-quality guides, click over to the AppLabx Blog.

People also ask

What are the key benefits of A/B testing for conversions?

A/B testing offers several benefits for conversions, including improved user experience, increased engagement, higher click-through rates, and ultimately, more conversions. It helps you identify what resonates best with your audience and refine your strategies accordingly.

How long should I run an A/B test to get reliable results?

The duration of an A/B test depends on factors like your traffic volume and the magnitude of the expected changes. Typically, it’s recommended to run tests for at least two to four weeks to capture different user behaviours. Ensure you collect a sufficient sample size for statistically significant results.

Can A/B testing be applied to email marketing?

Absolutely! A/B testing is a valuable tool for optimizing email marketing campaigns. You can experiment with different subject lines, email content, send times, and calls to action to determine what resonates best with your subscribers, leading to higher open rates, click-through rates, and conversions.